PostgreSQL, MySQL, MS SQL, BigQuery, Snowflake, Redshift, Oracle, DuckDB

In this quick start guide, we will write our first script in SQL. We will see how to connect a Windmill instance to an external SQL service and then send queries to the database using Windmill Scripts.

This tutorial covers how to create a simple script through the Windmill web IDE. See the dedicated page to develop scripts locally.

Windmill supports PostgreSQL, MySQL, Microsoft SQL Server, BigQuery and Snowflake. In any case, it requires creating a dedicated resource.

Although all users can use BigQuery, Snowflake and MS SQL through resources and community-available languages (TypeScript, Python, Go, Bash, etc.), only instances under Enterprise edition and cloud workspaces can use the BigQuery, Snowflake, Oracle DB and MS SQL runtimes as dedicated languages.

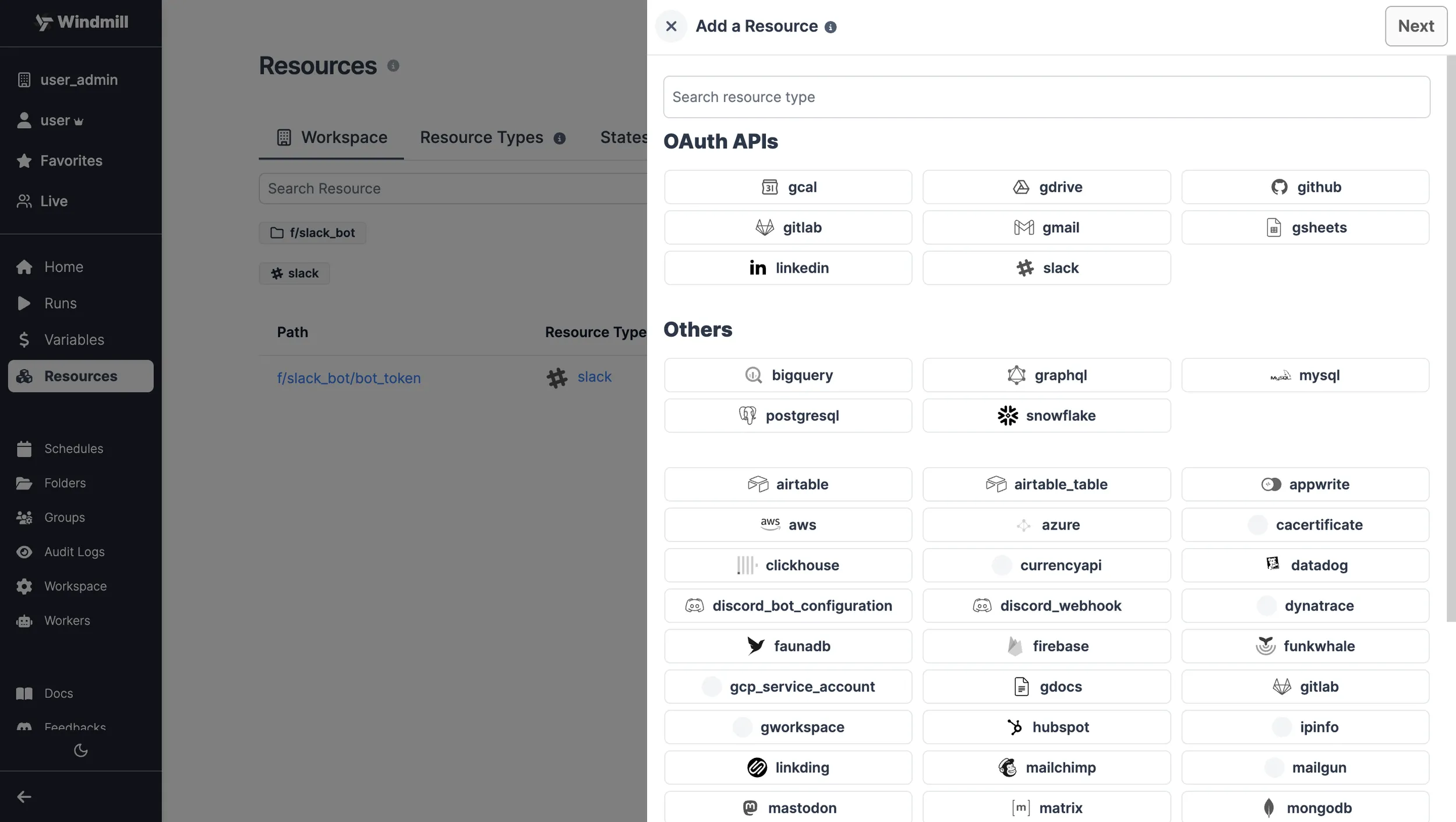

Create resource

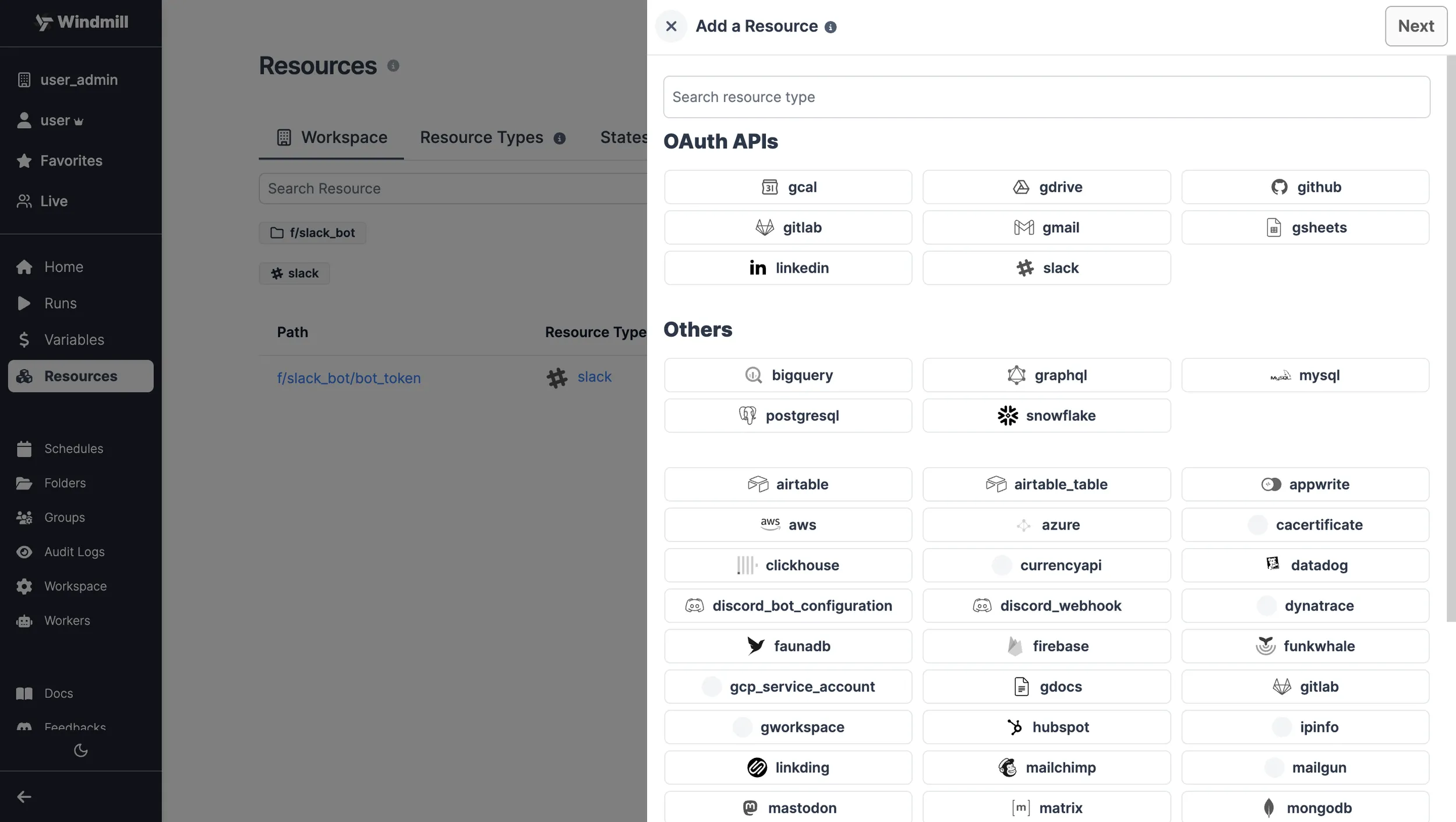

Windmill provides integrations with many different apps and services with the use of Resources. Resources are rich JSON objects that store configuration and credentials.

Each Resource has a Resource Type (PostgreSQL, MySQL, MS SQL, BigQuery, Snowflake) that defines the schema that the resource of this type needs to implement. Schemas implement the JSON Schema specification.

You can find a list of all the officially supported Resource types on Windmill Hub.

You can pin a resource to an SQL query by adding a -- database resource_path line to your script. The query will automatically use the resource without having to specify it in the autogenerated user interface.

PostgreSQL

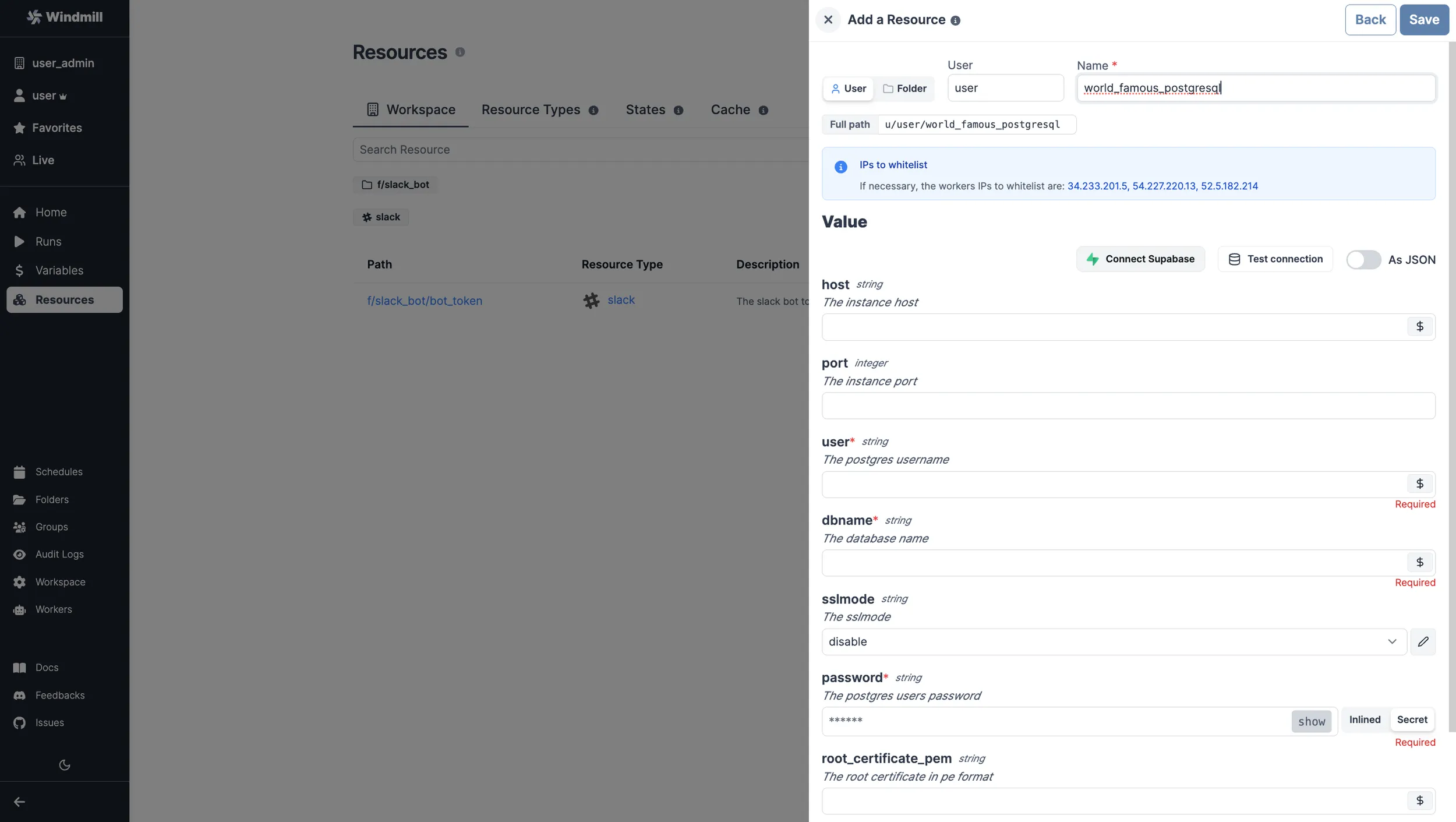

To be able to connect to a PostgreSQL instance (Supabase, Neon.tech, etc.), we'll need to define a Resource with the PostgreSQL Resource Type first.

Head to the Resources page, click on "Add resource" in the top right corner and select the PostgreSQL type.

Fill out the form with the information of your PostgreSQL instance and "Test connection" if needed.

For testing purposes, you can use the sample PostgreSQL Resource provided to every user. It is available under the path f/examples/demo_windmillshowcases.

PostgreSQL: Add a Supabase database

Windmill provides a wizard to easily add a Supabase database through PostgreSQL.

When creating a new PostgreSQL resource, click "Add a Supabase DB". This takes you to a Supabase page where you pick your organization. Back in Windmill, pick a database, enter the database password, and you are done.

Use SQL to build on external APIs using Sequin

With Sequin, developers can build on top of third-party services like Salesforce or HubSpot using SQL. More details at:

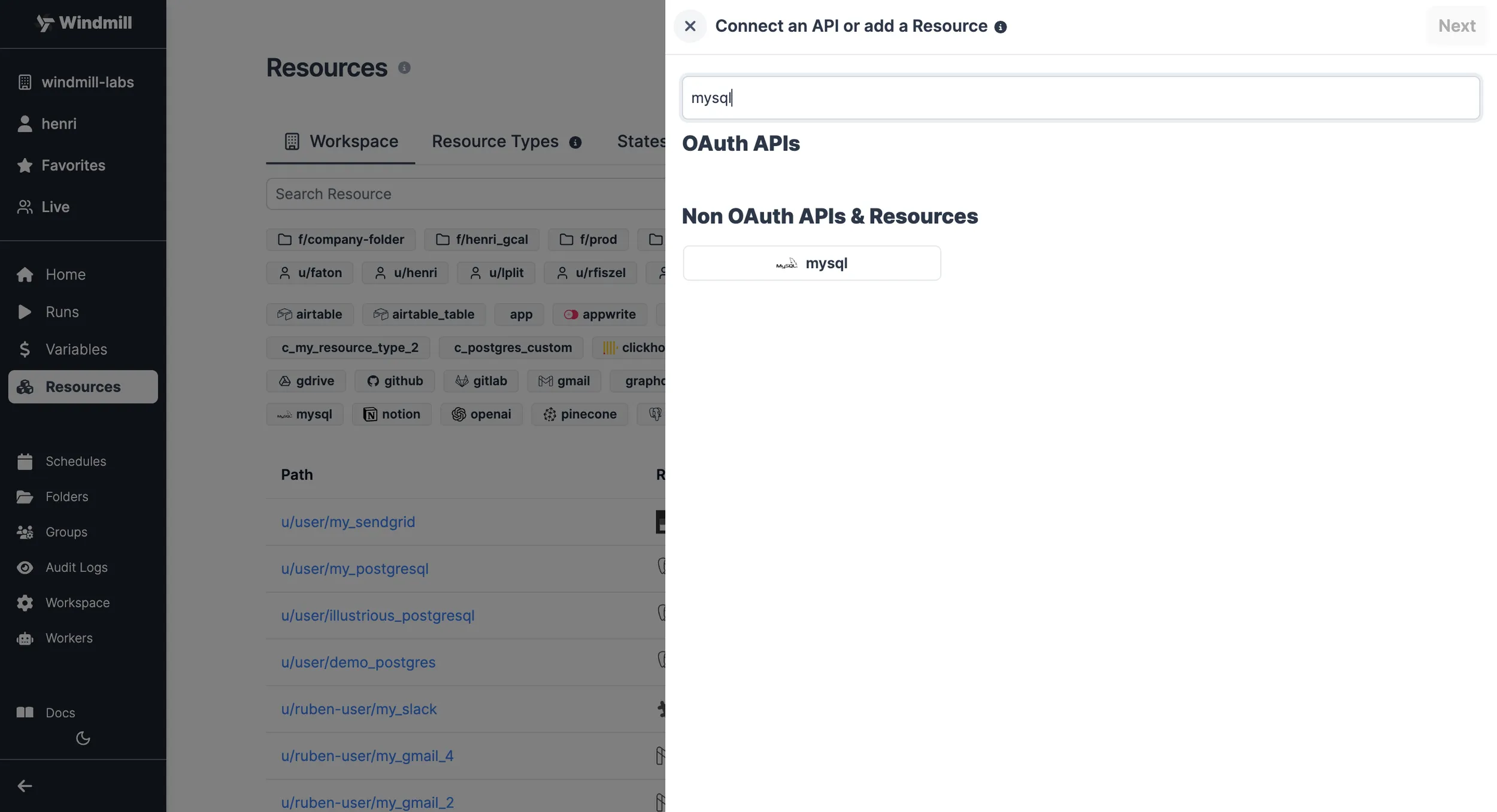

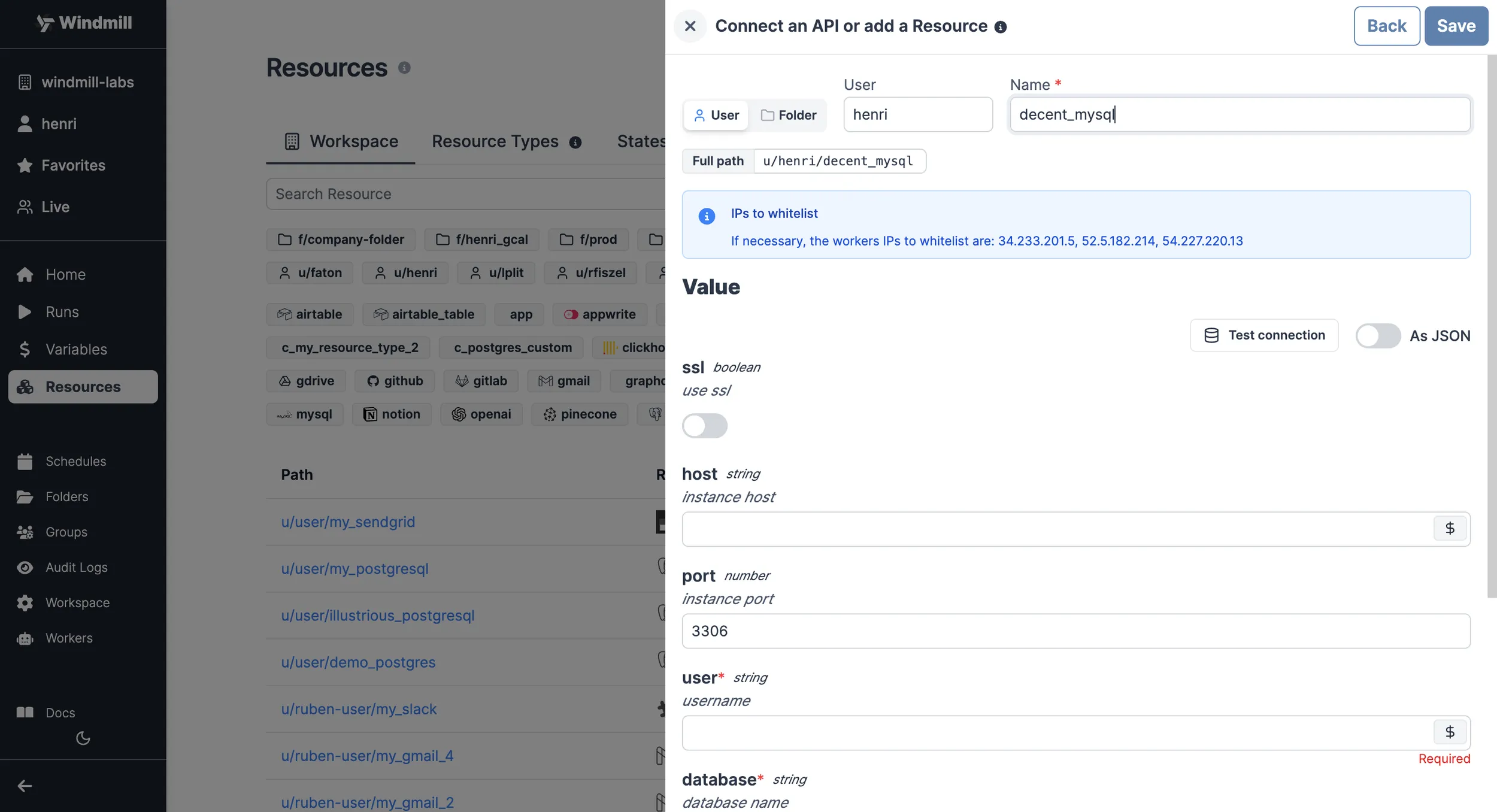

MySQL

To be able to connect to a MySQL instance, we'll need to define a Resource with the MySQL Resource Type first.

Head to the Resources page, click on "Add resource" in the top right corner and select the MySQL type.

Fill out the form with the information of your MySQL instance and "Test connection" if needed.

| Property | Type | Description | Default | Required | Where to Find |
|---|---|---|---|---|---|
| host | string | Instance host | | false | Your hosting provider's control panel or in your server's MySQL configuration file |
| port | number | Instance port | 3306 | false | Your hosting provider's control panel or in your server's MySQL configuration file |
| user | string | Username | | true | Created in MySQL (e.g., via phpMyAdmin or MySQL Workbench) or provided by your hosting |
| database | string | Database name | | true | Created in MySQL (e.g., via phpMyAdmin or MySQL Workbench) or provided by your hosting |
| password | string | User's password | | true | Created in MySQL (e.g., via phpMyAdmin or MySQL Workbench) or provided by your hosting |
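As an illustration, the Required and Default columns of the table above can be mirrored by a small Python check. This is a minimal sketch, not Windmill's own validation: the helper name `normalize_mysql_resource` is hypothetical, and it only encodes the values listed in the table.

```python
from typing import Any, Dict

# Required fields and defaults copied from the MySQL resource table above.
REQUIRED_FIELDS = ("user", "database", "password")
DEFAULTS = {"port": 3306}


def normalize_mysql_resource(resource: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the resource with defaults applied (hypothetical helper)."""
    missing = [name for name in REQUIRED_FIELDS if not resource.get(name)]
    if missing:
        raise ValueError(f"missing required fields: {', '.join(missing)}")
    # Values given in the resource take precedence over the defaults.
    return {**DEFAULTS, **resource}
```

Running such a check before saving a resource would surface a missing username or password earlier than a failed "Test connection".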

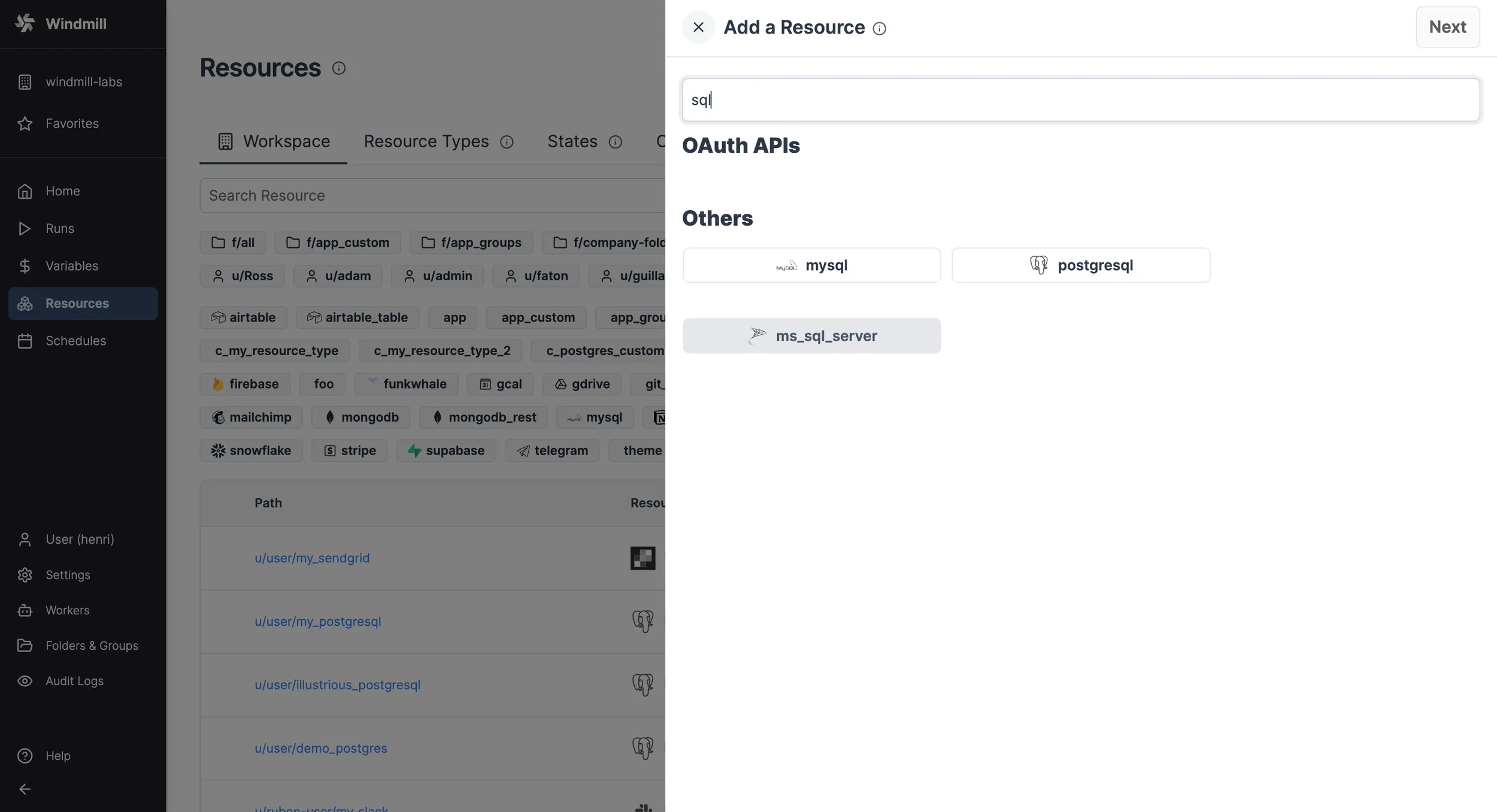

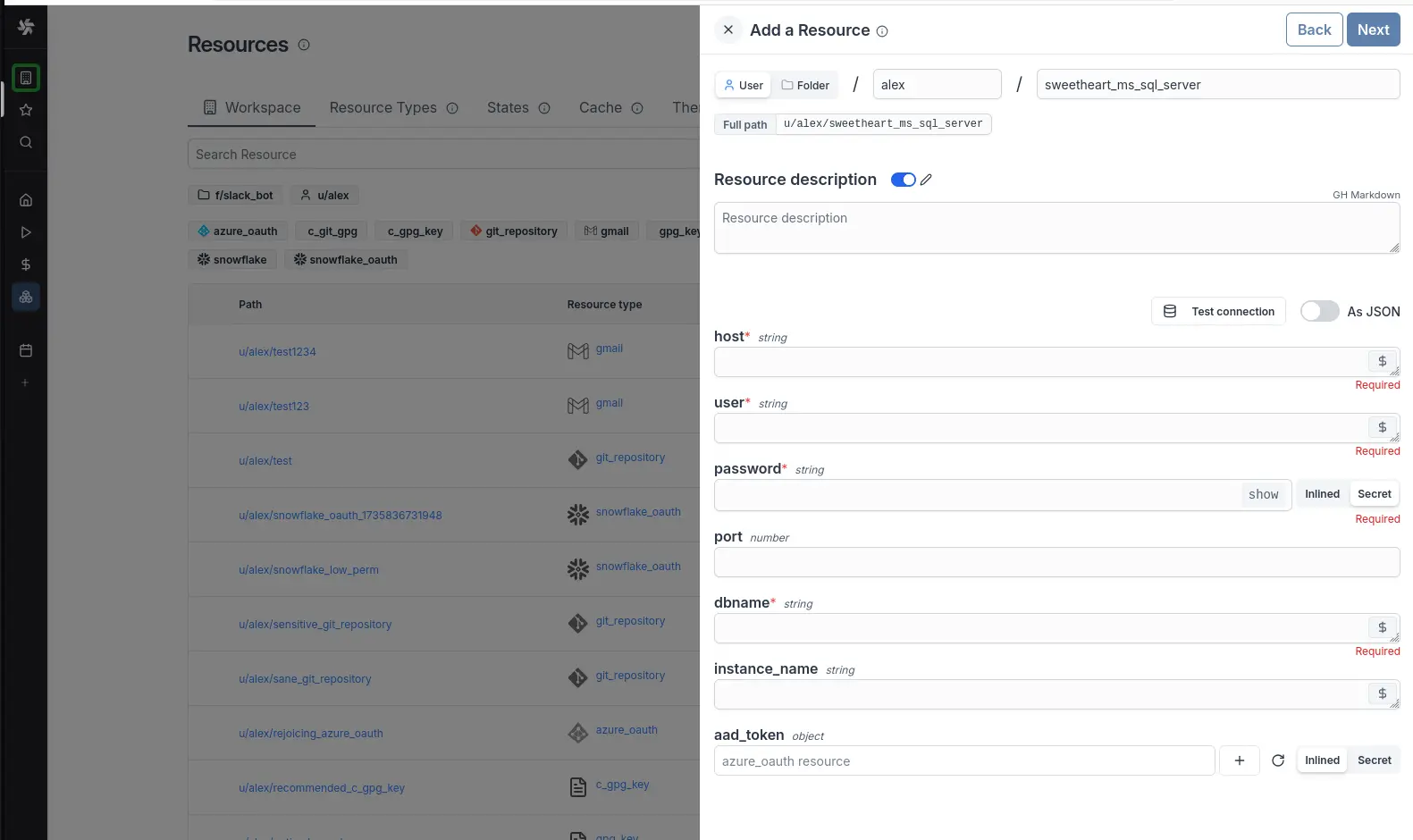

MS SQL

To be able to connect to a Microsoft SQL Server instance, we'll need to define a Resource with the ms_sql_server Resource Type first.

Head to the Resources page, click on "Add resource" in the top right corner and select the ms_sql_server type.

Fill out the form with the information of your MS SQL instance and "Test connection" if needed.

| Property | Type | Description | Default | Required | Where to Find |
|---|---|---|---|---|---|
| host | string | Instance host | | true | Your hosting provider's control panel or in your server's MS SQL configuration file |
| port | number | Instance port | | false | Your hosting provider's control panel or in your server's MS SQL configuration file |
| user | string | Username | | true | Created in MS SQL (e.g., via SQL Server Management Studio) or provided by your hosting |
| dbname | string | Database name | | true | Created in MS SQL (e.g., via SQL Server Management Studio) or provided by your hosting |
| password | string | User's password | | true | Created in MS SQL (e.g., via SQL Server Management Studio) or provided by your hosting |
| aad_token | object | OAuth token AD | | true | Either username/password or aad_token should be provided. Requires OAuth setup in Windmill |
| trust_cert | bool | Trust server certificate | true | false | If true, the server certificate will be trusted even if it is not signed by a trusted authority |
| ca_cert | string | CA certificate | | false | CA certificate to verify the server certificate. More information on MS SQL certificates |

To specify the application intent for read-only requests, add -- ApplicationIntent=ReadOnly to the script.

When using domain credentials via Entra (Azure Active Directory) you need to add the scope https://database.windows.net//.default to the Windmill OAuth instance setting.

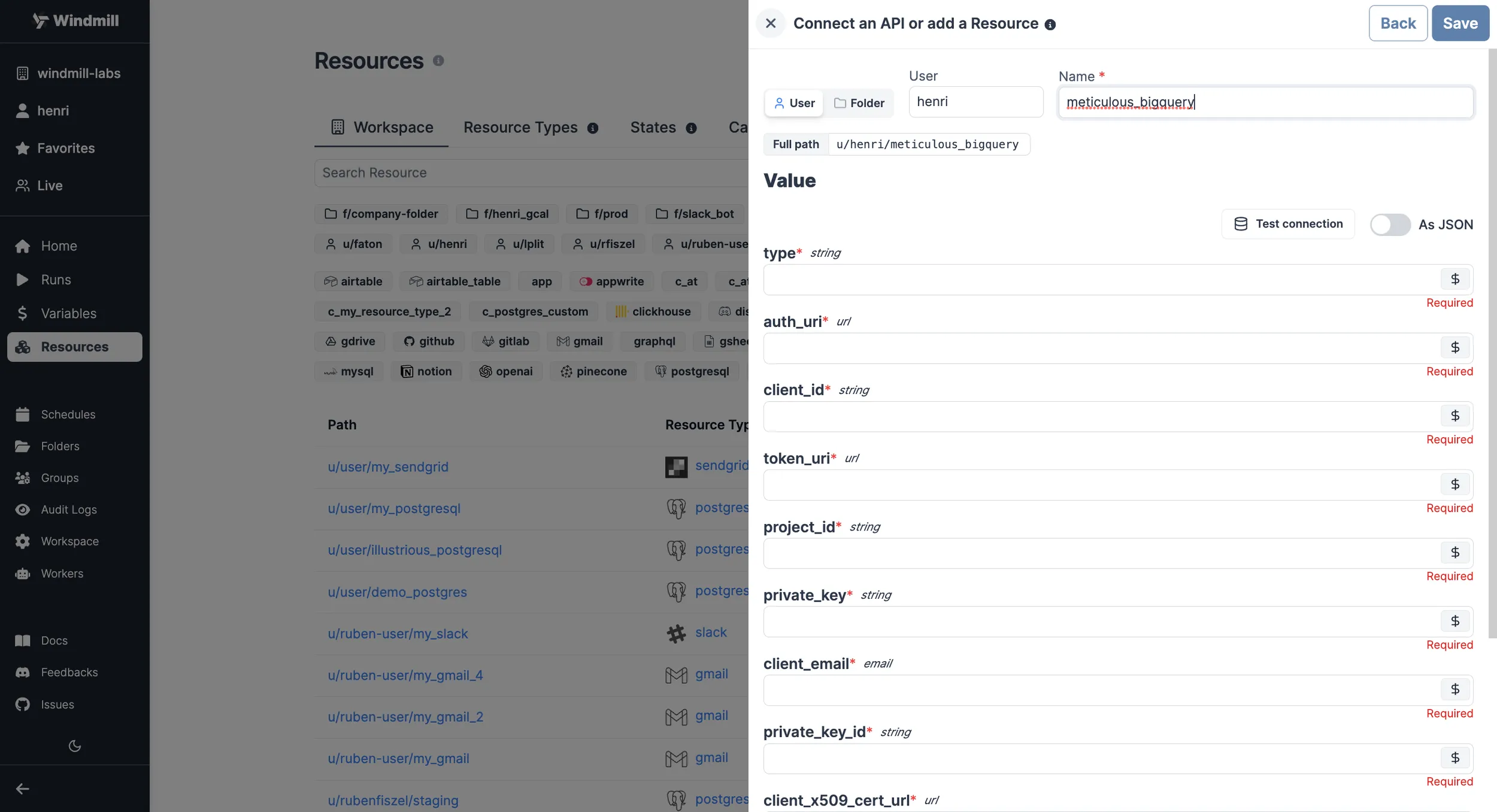

BigQuery

To be able to connect to a BigQuery instance, we'll need to define a Resource with the BigQuery Resource Type first.

Head to the Resources page, click on "Add resource" in the top right corner and select the BigQuery type.

| Property | Type | Description | Required |
|---|---|---|---|
| auth_provider_x509_cert_url | string | Auth provider X.509 certificate URL. | false |
| client_x509_cert_url | string | Client X.509 certificate URL. | false |
| private_key_id | string | ID of the private key used for authentication. | false |
| client_email | string | Email associated with the service account. | false |
| private_key | string | Private key used for authentication. | false |
| project_id | string | Google Cloud project ID. | true |
| token_uri | string | OAuth 2.0 token URI. | false |
| client_id | string | Client ID used for OAuth 2.0 authentication. | false |
| auth_uri | string | OAuth 2.0 authorization URI. | false |
| type | string | Type of the authentication method. | false |

Here's a step-by-step guide on where to find each detail.

- Service account creation:
  - Go to the Google Cloud Console.
  - Select the appropriate project from the top menu.
  - In the left navigation pane, go to "IAM & Admin" > "Service accounts".
  - Click on the "+ CREATE SERVICE ACCOUNT" button.
  - Provide a name and optional description for the service account.
  - Click "Create".
- Assign roles:
  - After creating the service account, you'll be prompted to grant roles to it. Select "BigQuery" roles such as "BigQuery Admin" or "BigQuery Data Editor" based on your needs.
  - Click "Continue" and "Done" to create the service account.
- Generate key:
  - In the "Service accounts" section, find the newly created service account in the list.
  - Click on the three dots on the right and select "Manage keys", then "Add Key".
  - Choose the key type as "JSON" and click "Create".
- Properties details: once you've generated the key, the downloaded JSON file will contain all the required properties.

You can directly "Test connection" if needed.
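Because the downloaded key file already holds every property from the table, filling the resource amounts to copying fields across. The sketch below shows one way this could be done in Python; the helper name `resource_from_key_file` is hypothetical and this is not how Windmill itself imports the file.

```python
import json
from typing import Any, Dict

# Property names copied from the BigQuery resource table above.
BIGQUERY_FIELDS = (
    "type", "project_id", "private_key_id", "private_key", "client_email",
    "client_id", "auth_uri", "token_uri",
    "auth_provider_x509_cert_url", "client_x509_cert_url",
)


def resource_from_key_file(key_json: str) -> Dict[str, Any]:
    """Keep only the fields the resource form expects (hypothetical helper)."""
    key = json.loads(key_json)
    # project_id is the only property marked as required in the table.
    if not key.get("project_id"):
        raise ValueError("project_id is required")
    return {name: key[name] for name in BIGQUERY_FIELDS if name in key}
```

Any extra fields present in the key file are simply dropped, so the result matches the shape of the resource form.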

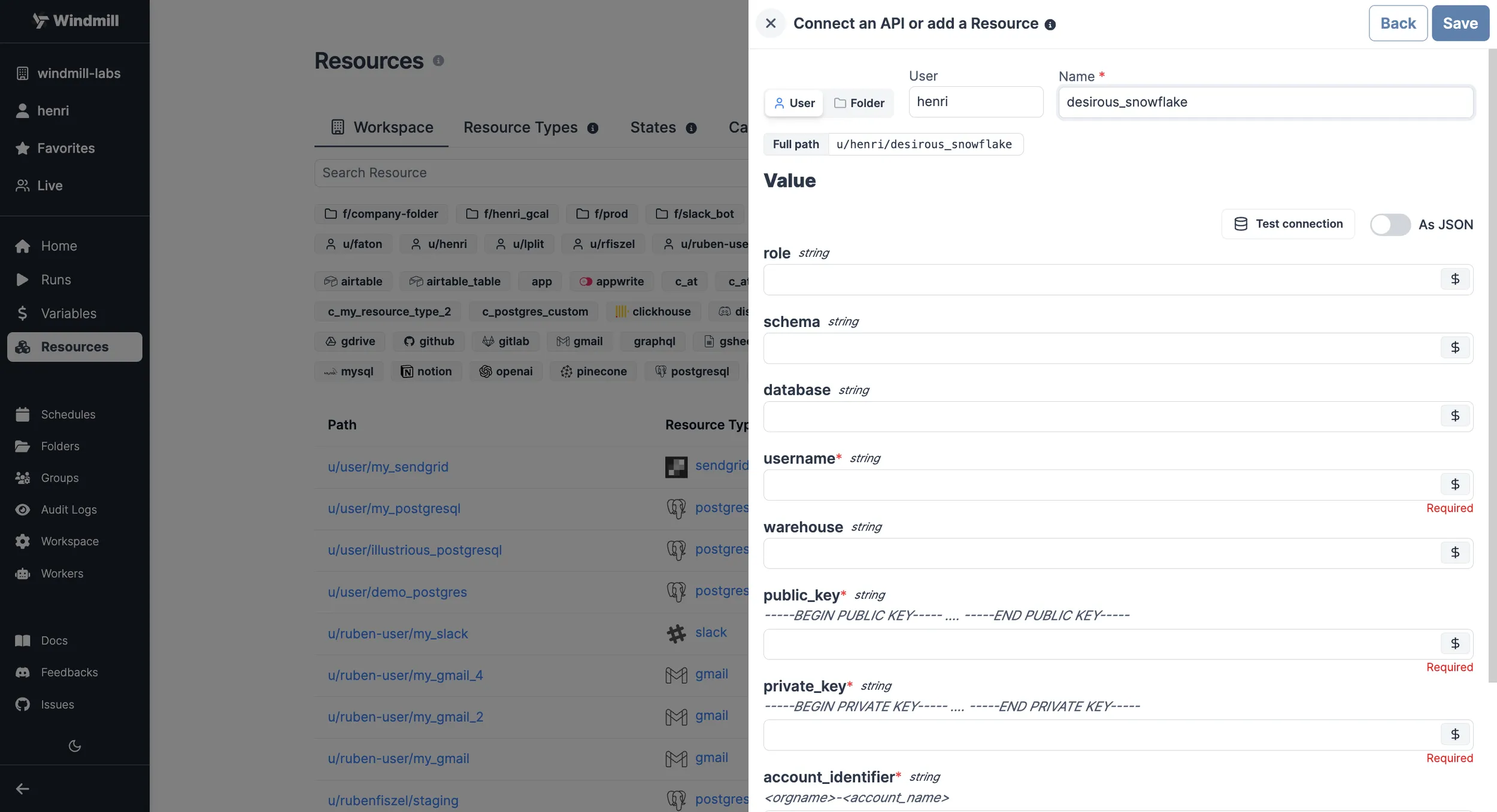

Snowflake

To connect to Snowflake, you can either set up OAuth for Snowflake or define a Snowflake Resource.

If a Snowflake OAuth connection is present, you can create a new Resource by heading to Resources, clicking on "Add Resource" in the top right corner and selecting snowflake_oauth. Take a look at this guide to learn more about how to build an App with Snowflake OAuth integration.

If you do not wish to use OAuth, click on "Add Resource" in the top right corner and select the Snowflake type instead.

| Property | Type | Description | Required |
|---|---|---|---|
| account_identifier | string | Snowflake account identifier in the format <orgname>-<account_name>. | true |
| private_key | string | Private key used for authentication. | true |
| public_key | string | Public key used for authentication. | true |
| warehouse | string | Snowflake warehouse to be used for queries. | false |
| username | string | Username for Snowflake login. | true |
| database | string | Name of the Snowflake database to connect to. | true |
| schema | string | Schema within the Snowflake database. | false |
| role | string | Role to be assumed upon connection. | false |

Here's a step-by-step guide on where to find each detail.

- Account identifier: the account identifier typically follows the format <orgname>-<account_name>. You can find it in the Snowflake web interface:
  - Log in to your Snowflake account.
  - The account identifier can often be found in the URL or at the top of the Snowflake interface after you log in (in the format https://app.snowflake.com/orgname/account_name/).
- Username: the username is the Snowflake user you will use to connect to the database. You will need to create a user if you don't have one:
  - In the Snowflake web interface, go to the "ACCOUNT" tab.
  - Select "Users" from the left navigation pane.
  - Click the "+ CREATE USER" button to create a new user with a username and password.
- Public key and private key: to create the public and private keys, you will need to generate them using a tool like OpenSSL:
  - Open a terminal window.
  - Use OpenSSL to generate a public and private key pair. The exact commands may vary based on your operating system.
  - For example, to generate a public key from an existing private key stored in private_key.pem:

    openssl rsa -pubout -in private_key.pem -out public_key.pem

  Once you have the keys, you can copy the content and paste them into the respective fields in your configuration. See the Snowflake documentation on Key Pair Authentication & Key Pair Rotation.
- Warehouse, schema, database, and role: these parameters are specific to your Snowflake environment and will depend on how your Snowflake instance is configured:
  - warehouse: The name of the Snowflake warehouse you want to connect to.
  - schema: The name of the Snowflake schema you want to use.
  - database: The name of the Snowflake database you want to connect to.
  - role: The role you want to use for authentication.

  You can find these details in the Snowflake web interface:
  - Log in to your Snowflake account.
  - You can find the names of warehouses, schemas, databases, and roles in the interface or by running SQL queries.

You can directly "Test connection" if needed.
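To make the account identifier step above concrete, here is a small sketch that derives the `<orgname>-<account_name>` value from the URL format mentioned earlier. The function name is hypothetical, and it assumes the URL really has the `https://app.snowflake.com/orgname/account_name/` shape, which may not hold for every Snowflake deployment.

```python
from urllib.parse import urlparse


def account_identifier_from_url(url: str) -> str:
    """Build '<orgname>-<account_name>' from an app.snowflake.com URL."""
    # Drop empty segments produced by leading and trailing slashes.
    parts = [segment for segment in urlparse(url).path.split("/") if segment]
    if len(parts) < 2:
        raise ValueError("expected a URL with /<orgname>/<account_name>/ in its path")
    orgname, account_name = parts[0], parts[1]
    return f"{orgname}-{account_name}"
```

For the example URL given in the guide, `account_identifier_from_url("https://app.snowflake.com/orgname/account_name/")` yields `orgname-account_name`.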

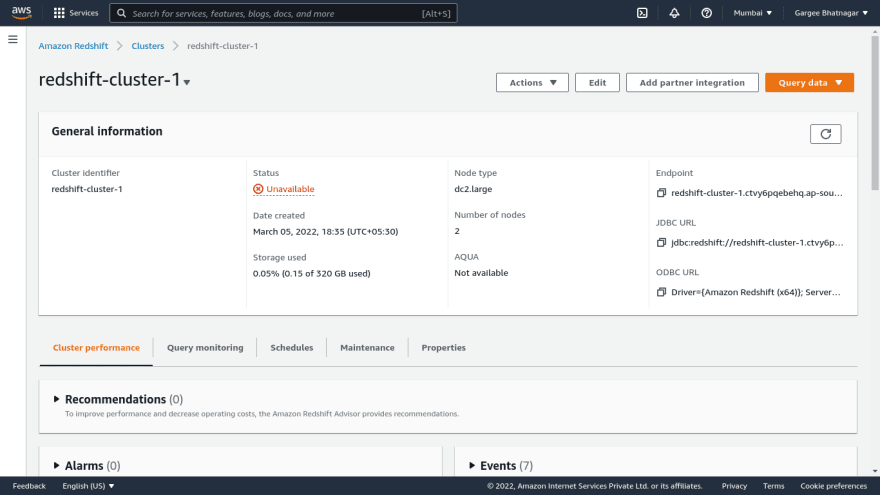

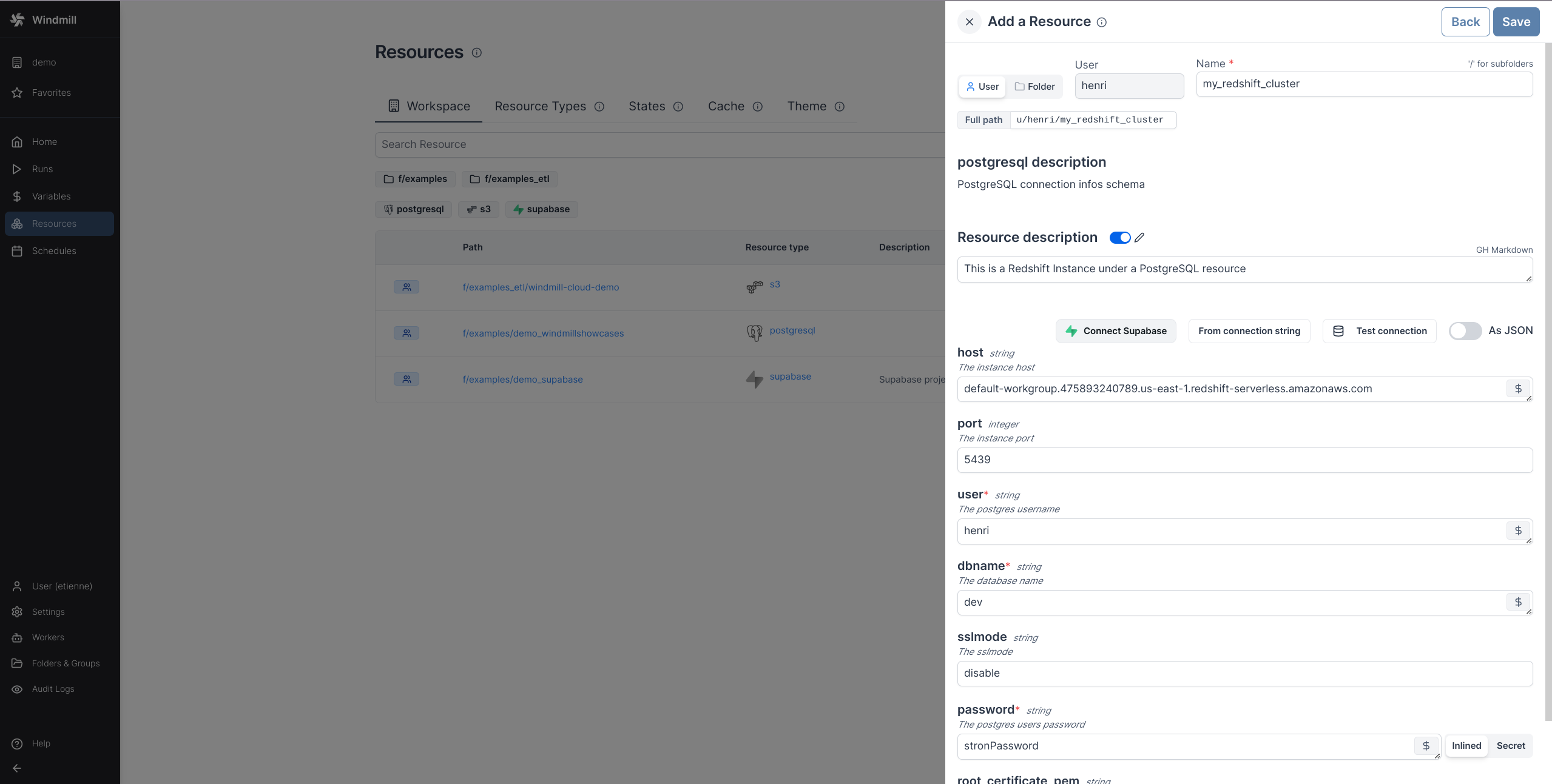

Amazon Redshift

To connect to an Amazon Redshift instance, we need to add the corresponding resource. Redshift is compatible with Windmill's PostgreSQL resources and scripts, so we'll start by adding a new PostgreSQL resource.

Get the required values from the AWS console under CLUSTERS > your Redshift cluster.

Find the value named 'endpoint'. It should look like this:

default-workgroup.475893240789.us-east-1.redshift-serverless.amazonaws.com:5439/dev

From there you can deduce your host, port and database name:

- host: default-workgroup.475893240789.us-east-1.redshift-serverless.amazonaws.com

- port: 5439

- dbname: dev
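The same split can be done programmatically. The following is a minimal sketch with a hypothetical helper name; it assumes the endpoint always follows the `host:port/dbname` shape shown above.

```python
from typing import Dict, Union


def split_redshift_endpoint(endpoint: str) -> Dict[str, Union[str, int]]:
    """Split 'host:port/dbname' into the fields of a PostgreSQL resource."""
    host_port, _, dbname = endpoint.partition("/")
    host, _, port = host_port.rpartition(":")
    if not host or not port.isdigit() or not dbname:
        raise ValueError("expected an endpoint of the form host:port/dbname")
    return {"host": host, "port": int(port), "dbname": dbname}
```

Applied to the endpoint above, it returns the host, the port 5439 as an integer, and the database name dev.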

Fill in those values in Windmill, along with the database user and password, then press "Test connection" to check that it works.

Once it works, press "Save": you have successfully added your Redshift instance as a PostgreSQL resource!

Oracle

To be able to connect to an Oracle database, you need to define an Oracle resource.

Head to the Resources page, click on "Add resource" in the top right corner and select the Oracle type.

| Property | Type | Description | Required |
|---|---|---|---|
| database | string | Database name | true |
| user | string | Username | true |
| password | string | User's password | true |

Here's a step-by-step guide on where to find each detail.

- Database: The name of the Oracle database you want to connect to. This can be found in your Oracle database configuration or by consulting your database administrator.
- Username: The username for Oracle login. You will need to create a user if you don't have one:
  - In the Oracle database interface, go to the "Users" section.
  - Create a new user with a username and password.
- Password: The password associated with the Oracle username.

You can directly "Test connection" if needed.

DuckDB

DuckDB scripts run in-memory out-of-the-box.

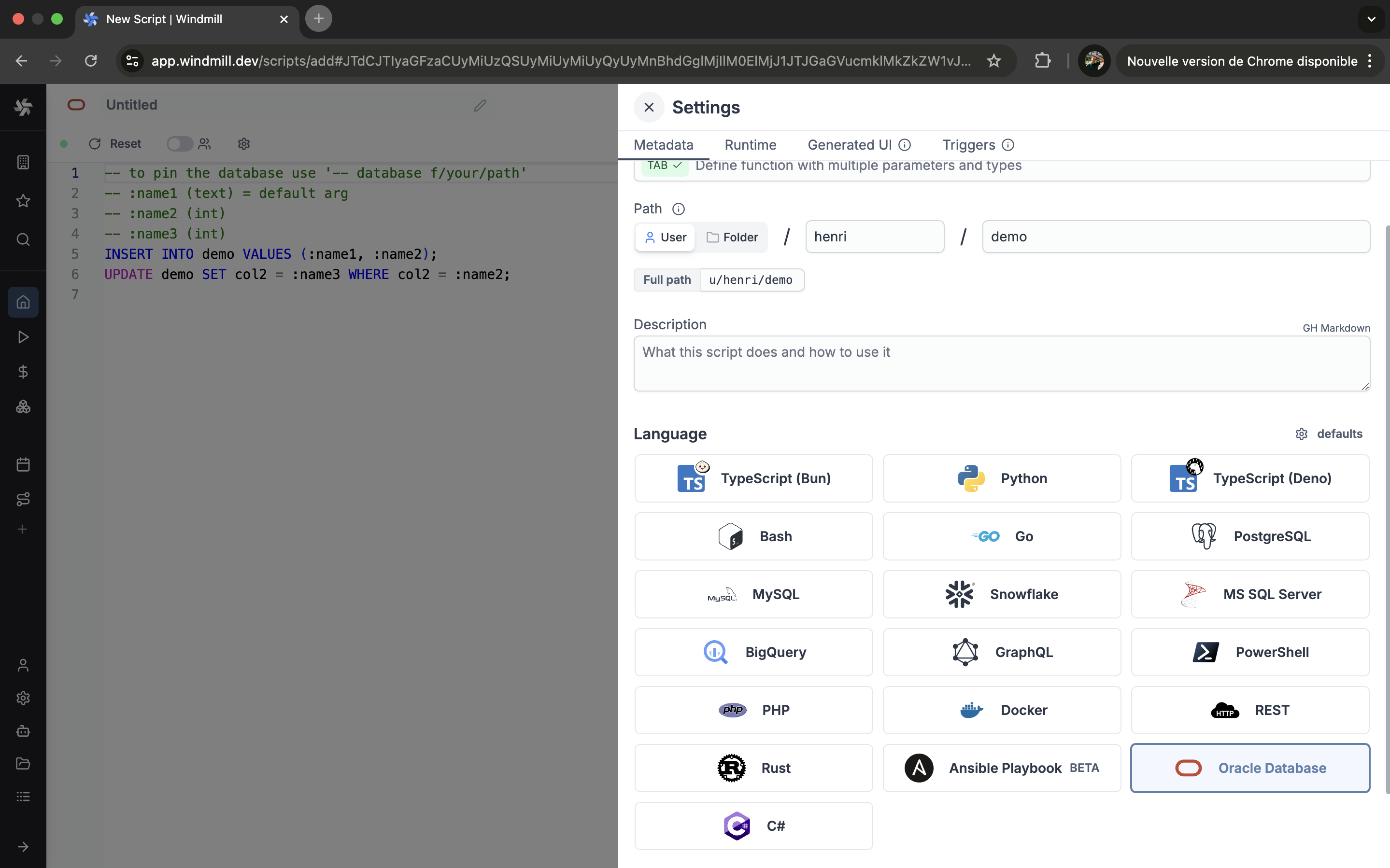

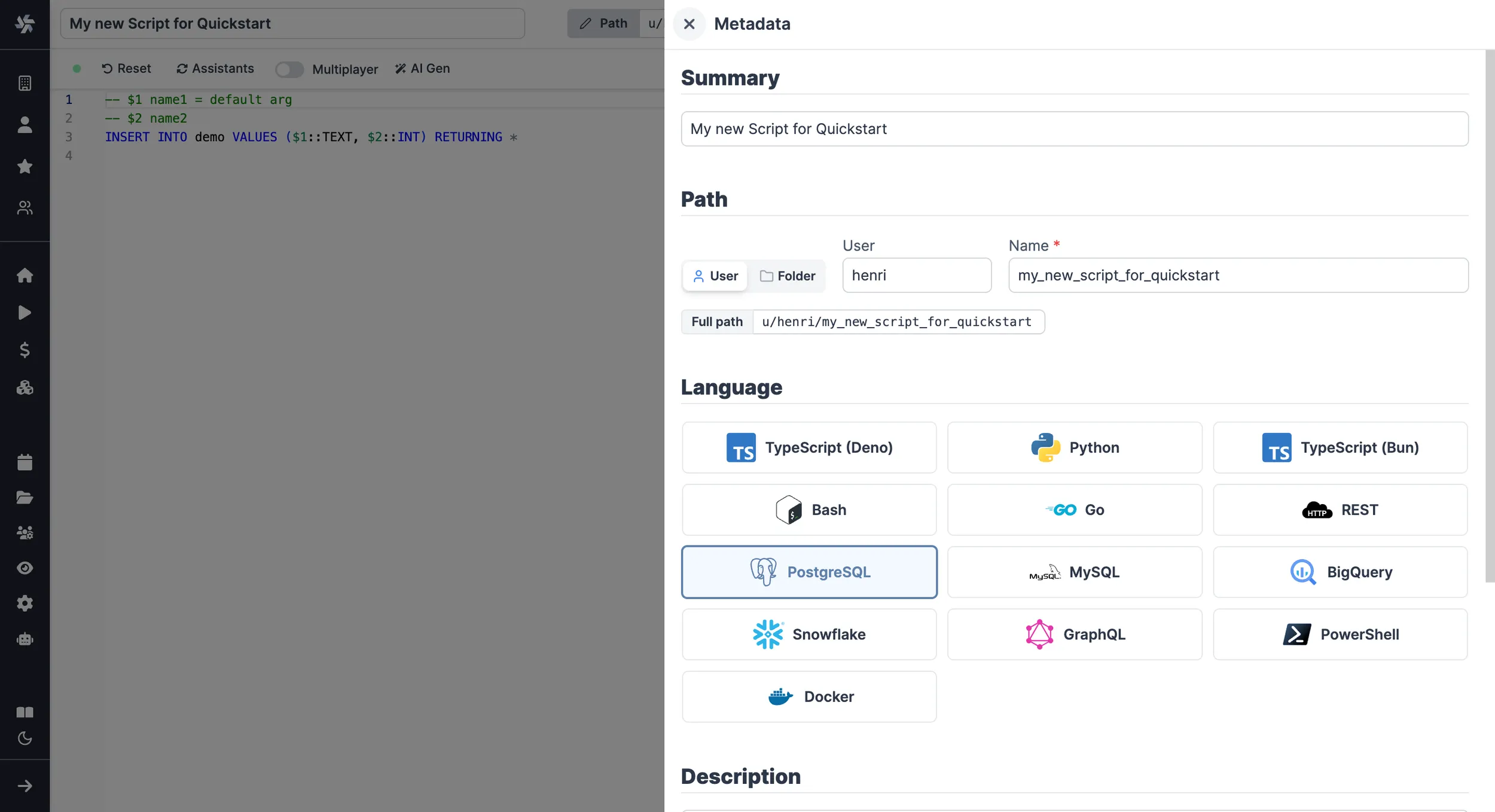

Create script

Next, let's create a script that will use the newly created Resource. From the Home page, click on the "+Script" button. Name the Script, give it a summary, and select your preferred language: PostgreSQL, MySQL, MS SQL, BigQuery or Snowflake.

You can also add more details to your script in the settings section. You can come back to this at any point.

PostgreSQL

Arguments need to be passed in the given format:

-- $1 name1 = default arg

-- $2 name2

INSERT INTO demo VALUES ($1::TEXT, $2::INT) RETURNING *

"name1", "name2" being the names of the arguments, and "default arg" the optional default value.

Database resource can be specified from the UI or directly within script with a line -- database resource_path.

You can then write your prepared statement.
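To make the header format above concrete, the sketch below reads `-- $N name = default` lines out of a script. It is only an illustration of the format: the names `parse_pg_args` and `PgArg` are hypothetical, this is not Windmill's parser, and it handles only the exact form shown in this section.

```python
import re
from typing import List, Optional, TypedDict


class PgArg(TypedDict):
    index: int
    name: str
    default: Optional[str]


# Matches "-- $1 name1 = default arg" and "-- $2 name2".
_ARG_LINE = re.compile(r"^--\s*\$(\d+)\s+(\w+)\s*(?:=\s*(.*\S))?\s*$")


def parse_pg_args(script: str) -> List[PgArg]:
    """Collect the declared arguments from the comment header of a script."""
    args: List[PgArg] = []
    for line in script.splitlines():
        match = _ARG_LINE.match(line.strip())
        if match:
            index, name, default = match.groups()
            args.append({"index": int(index), "name": name, "default": default})
    return args
```

Lines such as `-- database resource_path` are ignored because they do not start with a `$N` placeholder.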

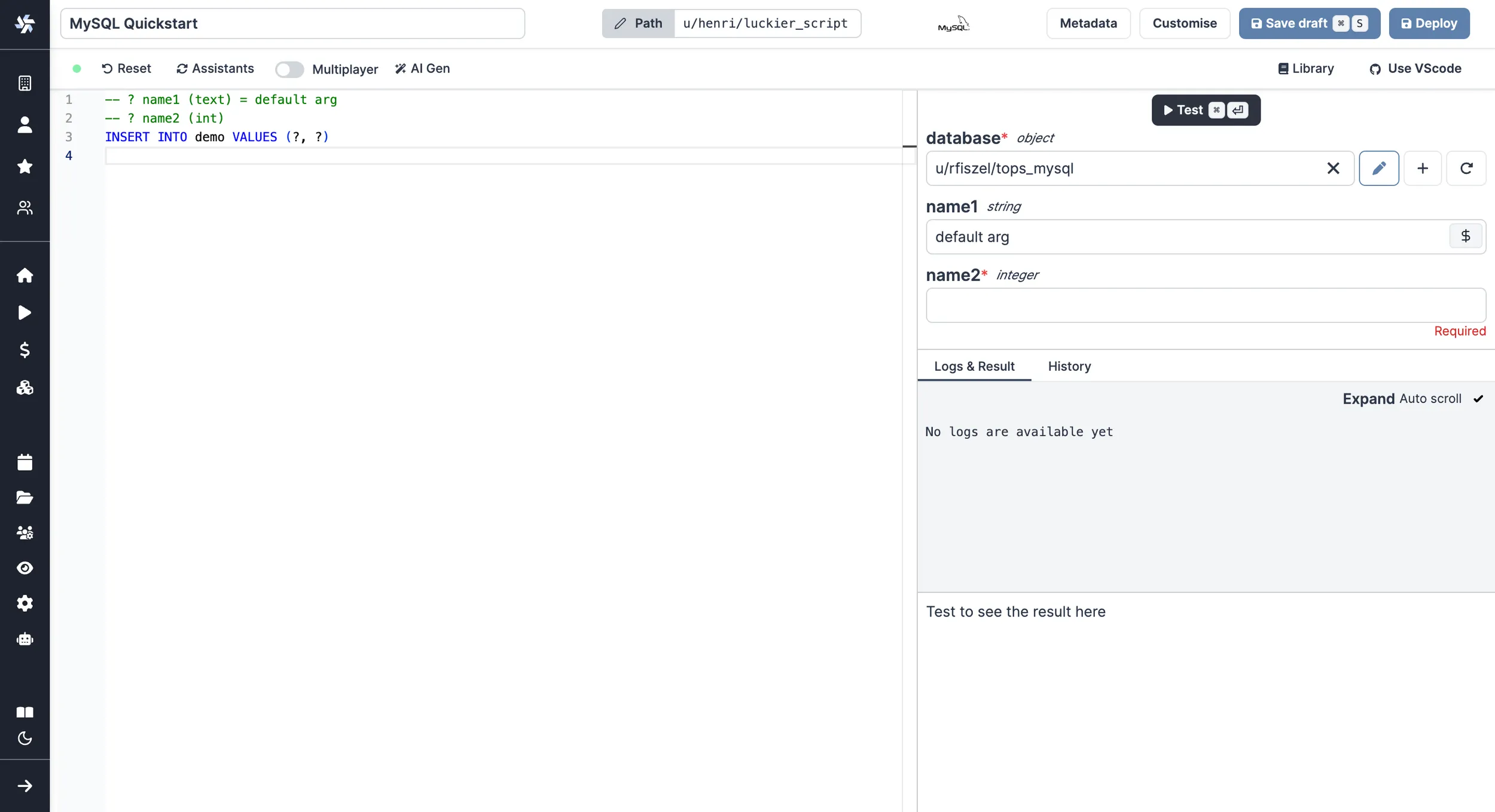

MySQL

Arguments need to be passed in the given format:

-- :name1 (text) = default arg

-- :name2 (int)

INSERT INTO demo VALUES (:name1, :name2)

"name1", "name2" being the names of the arguments, and "default arg" the optional default value.

Database resource can be specified from the UI or directly within script with a line -- database resource_path.

You can then write your prepared statement.

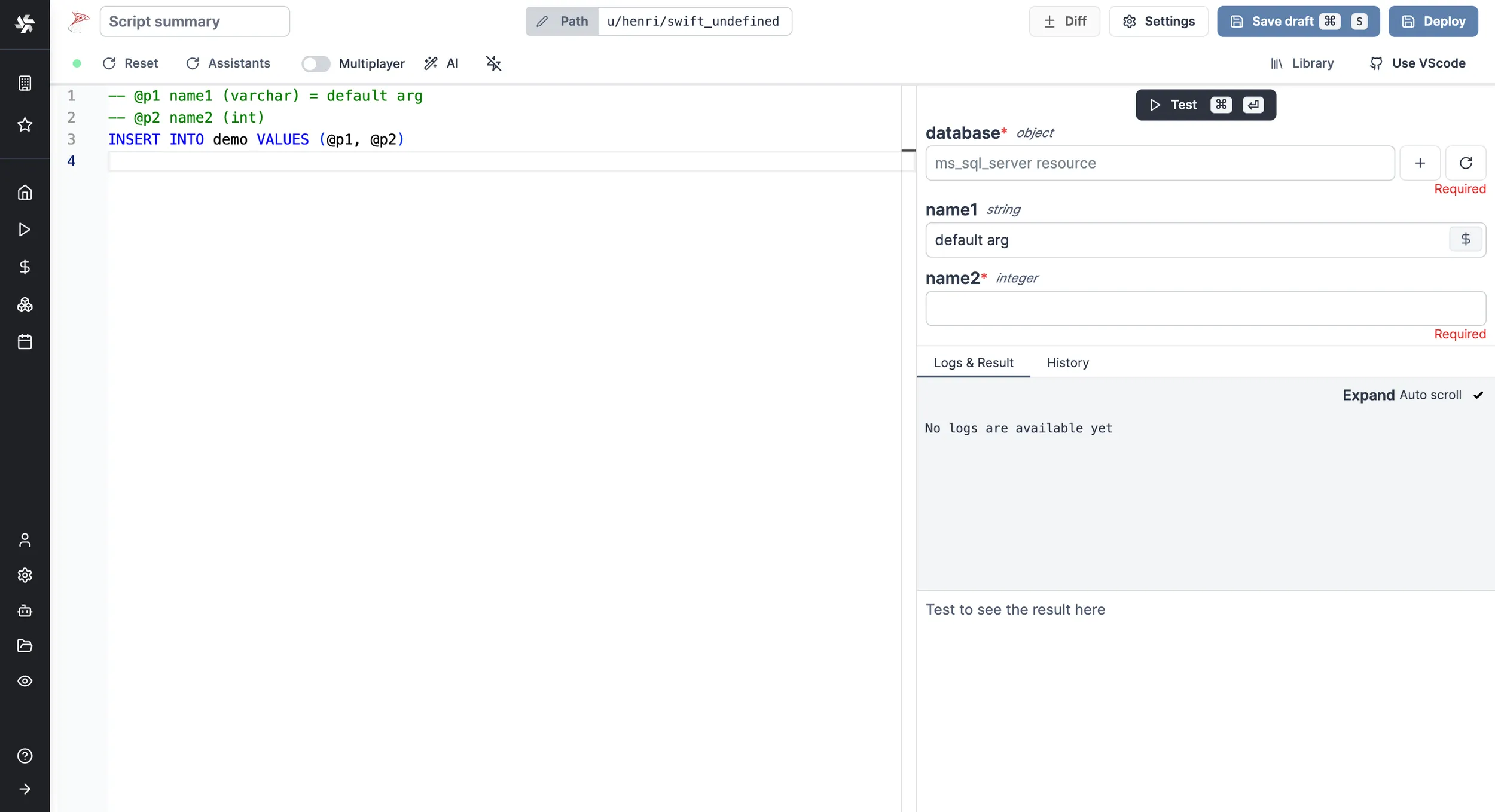

MS SQL

Arguments need to be passed in the given format:

-- @P1 name1 (varchar) = default arg

-- @P2 name2 (int)

INSERT INTO demo VALUES (@P1, @P2)

"name1", "name2" being the names of the arguments, and "default arg" the optional default value.

Database resource can be specified from the UI or directly within script with a line -- database resource_path.

You can then write your prepared statement.

BigQuery

Arguments need to be passed in the given format:

-- @name1 (string) = default arg

-- @name2 (integer)

-- @name3 (string[])

INSERT INTO `demodb.demo` VALUES (@name1, @name2, @name3)

"name1", "name2", "name3" being the names of the arguments, "default arg" the optional default value and string, integer and string[] the types.

Database resource can be specified from the UI or directly within script with a line -- database resource_path.

You can then write your prepared statement.

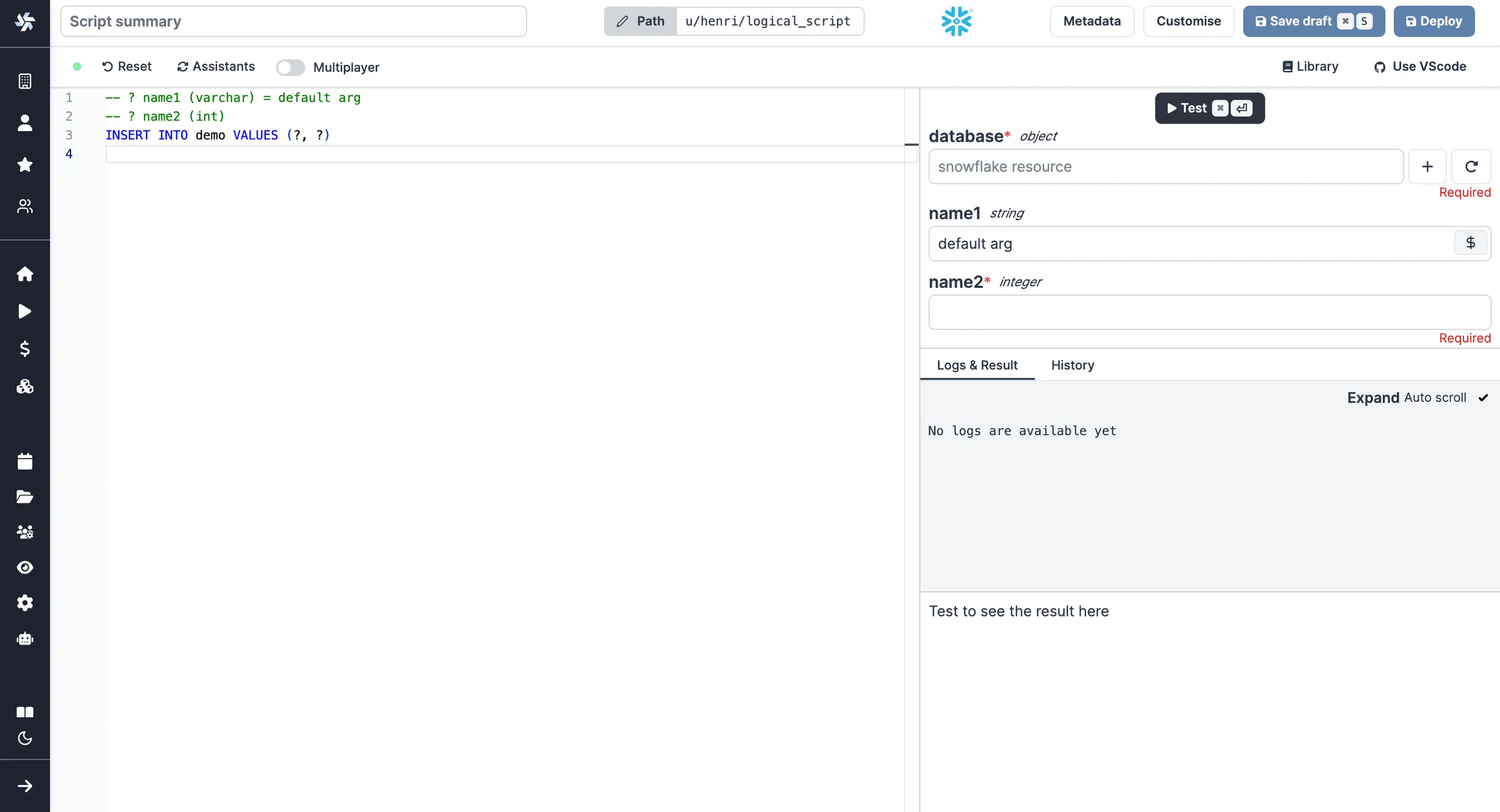

Snowflake

Arguments need to be passed in the given format:

-- ? name1 (varchar) = default arg

-- ? name2 (int)

INSERT INTO demo VALUES (?, ?)

"name1", "name2" being the names of the arguments, "default arg" the optional default value and varchar & int the types.

Database resource can be specified from the UI or directly within script with a line -- database resource_path.

You can then write your prepared statement.

Amazon Redshift

Since Redshift is compatible with Windmill's PostgreSQL, you can follow the same instructions as for PostgreSQL scripts. Make sure to select your Redshift instance as a resource.

Remember that when using a Redshift resource, you should write valid Redshift SQL, not PostgreSQL. For example, the RETURNING * syntax is not supported, so you may want to change the default script to something like:

-- $1 name1 = default arg

-- $2 name2

INSERT INTO demo VALUES ($1::TEXT, $2::INT)

Learn more about the differences here.

Oracle

Arguments need to be passed in the given format:

-- database f/your/path

-- :name1 (text) = default arg

-- :name2 (int)

-- :name3 (int)

INSERT INTO demo VALUES (:name1, :name2);

UPDATE demo SET col2 = :name3 WHERE col2 = :name2;

"name1", "name2", "name3" being the names of the arguments, and "default arg" the optional default value.

DuckDB

DuckDB arguments need to be passed in the given format:

-- $name1 (text) = default arg

-- $name2 (int)

INSERT INTO demo VALUES ($name1, $name2)

"name1", "name2" being the names of the arguments, and "default arg" the optional default value.

You can pass a file on S3 as an argument of type s3object. It is substituted with the correct 's3:///...' path at runtime. You can then query this file using the standard read_csv/read_parquet/read_json functions:

-- $file (s3object)

SELECT * FROM read_parquet($file)

Alternatively, you can reference files on the workspace directly using s3:// notation.

For primary workspace storage:

SELECT * FROM read_parquet('s3:///path/to/file.parquet')

For secondary storage:

SELECT * FROM read_parquet('s3://<secondary_storage>/path/to/file.parquet')

This notation also works with glob patterns:

SELECT * FROM read_parquet('s3:///myfiles/*.parquet')

The s3:// notation now uses the Windmill S3 Proxy by default.
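The two URI shapes above differ only in whether a storage name appears between the slashes. As a small illustration, a helper like the one below (a hypothetical name, not part of Windmill) builds either form from a path.

```python
from typing import Optional


def workspace_s3_uri(path: str, secondary_storage: Optional[str] = None) -> str:
    """Build an s3:// URI for primary storage, or for a named secondary storage."""
    # Primary workspace storage uses an empty storage name, hence "s3:///".
    storage = secondary_storage or ""
    return f"s3://{storage}/{path.lstrip('/')}"
```

Calling it with only a path gives the `s3:///path/to/file.parquet` form, and passing a storage name gives the secondary-storage form.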

You can also attach to other database resources (BigQuery, PostgreSQL and MySQL). We use the official and community DuckDB extensions under the hood:

ATTACH '$res:u/demo/amazed_postgresql' AS db (TYPE postgres);

SELECT * FROM db.public.friends;

Database resource can be specified from the UI or directly within the script with a line -- database resource_path.

You can then write your prepared statement.

Raw queries

Safe interpolated arguments

To allow more flexibility than with prepared statements, Windmill offers the possibility to do safe string interpolation in your queries thanks to backend schema validation. This allows you to use script parameters for elements you would usually not be able to, such as table or column names. In order to avoid SQL injections however, these parameters are checked at runtime and the job will fail if any of these rules is not followed:

- The parameter is a non-empty string.

- The characters are all either alphabetical (ASCII only), numeric, or an underscore (_), meaning no whitespace or symbol is allowed.

- The string does not start with a number.

- If the parameter is an enum, it must be one of the defined variants.

These rules are strict enough to protect from any kind of unexpected injection, but lenient enough to have some powerful use cases. Let's look at an example:

-- :daily_minimum_calories (int)

-- %%table_name%% fruits/vegetables/cereals

SELECT name, calories FROM %%table_name%% WHERE calories > :daily_minimum_calories

In this example the argument table_name is defined as a string that can be either "fruits", "vegetables" or "cereals", and the user of the script can then choose which table to query by setting this argument. If the user of the script tries to query a different table, the job will fail before making a connection to the DB, and thus protecting potentially sensitive data.

If the enum variants are omitted, the field is considered to be a regular string and only the other rules apply:

-- :daily_minimum_calories (int)

-- %%table_name%%

SELECT name, calories FROM %%table_name%% WHERE calories > :daily_minimum_calories

Keep in mind that this means users of this script can try this query against any table name, whether or not the table exists in the database.
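The runtime rules listed in this section are simple enough to sketch in a few lines. The function below is an illustration of those rules only, written under the assumption that they are applied exactly as stated; its name is hypothetical and it is not Windmill's backend implementation.

```python
import re
from typing import Optional, Sequence

# ASCII letters, digits and underscores only, and no leading digit.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def is_safe_interpolated_arg(value: object, variants: Optional[Sequence[str]] = None) -> bool:
    """Return True if value satisfies the interpolation rules described above."""
    if not isinstance(value, str) or value == "":
        return False
    if _IDENTIFIER.fullmatch(value) is None:
        return False
    # When enum variants are declared, the value must be one of them.
    if variants is not None and value not in variants:
        return False
    return True
```

With the variants fruits/vegetables/cereals, a value such as "fruits" passes, while "meat" or "fruits; DROP TABLE fruits" is rejected before any query is built.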

Unsafe interpolation on a REST script

A more convenient but less secure option is to execute raw queries with a TypeScript (Bun or Deno) or Python client. You can, for instance, use string interpolation to make the name of the table a parameter of your script: SELECT * FROM ${table}. However, this is dangerous because the string is interpolated directly, which opens the door to SQL injection. Use with care and only in trusted environments.
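To see why direct interpolation is risky, consider the sketch below. It uses Python's built-in sqlite3 module with an in-memory database as a stand-in for a real server, and the table names, data and function names are all made up for the illustration.

```python
import sqlite3

# In-memory stand-in database with one public and one sensitive table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE demo (name TEXT)")
conn.execute("CREATE TABLE secrets (token TEXT)")
conn.executemany("INSERT INTO demo VALUES (?)", [("apple",), ("oats",)])
conn.execute("INSERT INTO secrets VALUES ('hunter2')")

ALLOWED_TABLES = {"demo"}


def unsafe_select(table: str):
    # The input becomes part of the SQL text, so it can rewrite the query.
    return conn.execute(f"SELECT name FROM {table}").fetchall()


def guarded_select(table: str):
    # Same interpolation, but only after checking against an allowlist.
    if table not in ALLOWED_TABLES:
        raise ValueError(f"table not allowed: {table!r}")
    return conn.execute(f"SELECT name FROM {table}").fetchall()


payload = "demo UNION ALL SELECT token FROM secrets"
```

Here `unsafe_select(payload)` returns the row from the secrets table alongside the demo rows, while `guarded_select(payload)` raises before any query runs.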

PostgreSQL

- TypeScript (Bun)

- TypeScript (Deno)

- Python

import * as wmill from 'windmill-client';

import { Client } from 'pg';

// Define the resource type as specified

type Postgresql = {

host: string,

port: number,

user: string,

dbname: string,

sslmode: string,

password: string,

root_certificate_pem: string

}

// The main function that will execute a query on a Postgresql database

export async function main(query = 'SELECT * FROM demo', pg_resource: Postgresql) {

// Initialize the PostgreSQL client. TLS is used unless sslmode is 'disable',

// without strict certificate validation (rejectUnauthorized: false)

const client = new Client({

host: pg_resource.host,

port: pg_resource.port,

user: pg_resource.user,

password: pg_resource.password,

database: pg_resource.dbname,

ssl: pg_resource.sslmode === 'disable' ? false : { rejectUnauthorized: false },

});

try {

// Connect to the database

await client.connect();

// Execute the query

const res = await client.query(query);

// Close the connection

await client.end();

// Return the query result

return res.rows;

} catch (error) {

console.error('Database query failed:', error);

// Rethrow the error to handle it outside or log it appropriately

throw error;

}

}

View script on Windmill Hub.

import {

type Sql,

} from "https://deno.land/x/[email protected]/mod.ts";

import { Client } from "https://deno.land/x/[email protected]/mod.ts"

type Postgresql = {

host: string;

port: number;

user: string;

dbname: string;

sslmode: string;

password: string;

};

export async function main(db: Postgresql, query: Sql = "SELECT * FROM demo;") {

if (!query) {

throw Error("Query must not be empty.");

}

const { rows } = await pgClient(db).queryObject(query);

return rows;

}

export function pgClient(db: any) {

let db2 = {

...db,

hostname: db.host,

database: db.dbname,

tls: {

enabled: false,

},

}

return new Client(db2)

}

View script on Windmill Hub.

from typing import TypedDict, Dict, Any

import psycopg2

# Define the PostgreSQL resource type as specified

class postgresql(TypedDict):

host: str

port: int

user: str

dbname: str

sslmode: str

password: str

root_certificate_pem: str

def main(query: str, db_config: postgresql) -> list[Dict[str, Any]]:

# Connect to the PostgreSQL database

conn = psycopg2.connect(

host=db_config["host"],

port=db_config["port"],

user=db_config["user"],

password=db_config["password"],

dbname=db_config["dbname"],

sslmode=db_config["sslmode"],

sslrootcert=db_config["root_certificate_pem"],

)

# Create a cursor object

cur = conn.cursor()

# Execute the query

cur.execute(query)

# Fetch all rows from the last executed statement

rows = cur.fetchall()

# Read the column names before closing the cursor

columns = [desc[0] for desc in cur.description]

# Close the cursor and connection

cur.close()

conn.close()

# Convert the rows to a list of dictionaries to make it more readable

result = [dict(zip(columns, row)) for row in rows]

return result

View script on Windmill Hub.

You can find more Script examples related to PostgreSQL on Windmill Hub.

MySQL

The same logic goes for MySQL.

- TypeScript (Bun)

- TypeScript (Deno)

- Python

import { createConnection } from 'mysql';

// Define the Mysql resource type as specified

type Mysql = {

ssl: boolean,

host: string,

port: number,

user: string,

database: string,

password: string

}

// The main function that will execute a query on a Mysql resource

export async function main(mysqlResource: Mysql, query: string): Promise<any> {

// Create a promise to handle the MySQL connection and query execution

return new Promise((resolve, reject) => {

// Create a connection to the MySQL database using the resource credentials

const connection = createConnection({

host: mysqlResource.host,

port: mysqlResource.port,

user: mysqlResource.user,

password: mysqlResource.password,

database: mysqlResource.database,

ssl: mysqlResource.ssl

});

// Connect to the MySQL database

connection.connect(err => {

if (err) {

reject(err);

return;

}

// Execute the query provided as a parameter

connection.query(query, (error, results) => {

// Close the connection after the query execution

connection.end();

if (error) {

reject(error);

} else {

resolve(results);

}

});

});

});

}

View script on Windmill Hub.

import { createPool as createMysqlPool } from "npm:mysql2/promise";

// Define the MySQL resource type as specified

type Mysql = {

ssl: boolean,

host: string,

port: number,

user: string,

database: string,

password: string

}

// The main function that executes a query on a MySQL database

export async function main(

mysqlResource: Mysql,

query: string,

): Promise<any> {

// Adjust the SSL configuration based on the mysqlResource.ssl value

const sslConfig = mysqlResource.ssl ? { rejectUnauthorized: true } : false;

// Create a new connection pool using the provided MySQL resource

const pool = createMysqlPool({

host: mysqlResource.host,

user: mysqlResource.user,

database: mysqlResource.database,

password: mysqlResource.password,

port: mysqlResource.port,

// Use the adjusted SSL configuration

ssl: sslConfig,

waitForConnections: true,

connectionLimit: 10,

queueLimit: 0,

});

try {

// Get a connection from the pool and execute the query

const [rows] = await pool.query(query);

return rows;

} catch (error) {

// If an error occurs, throw it to be handled by the caller

throw new Error(`Failed to execute query: ${error}`);

} finally {

// Always close the pool after the operation is complete

await pool.end();

}

}

View script on Windmill Hub.

from typing import TypedDict

import mysql.connector as mysql_connector

# Define the MySQL resource type

class mysql(TypedDict):

ssl: bool

host: str

port: float

user: str

database: str

password: str

def main(mysql_credentials: mysql, query: str) -> str:

# Connect to the MySQL database using the provided credentials

connection = mysql_connector.connect(

host=mysql_credentials["host"],

user=mysql_credentials["user"],

password=mysql_credentials["password"],

database=mysql_credentials["database"],

port=int(mysql_credentials["port"]),

ssl_disabled=not mysql_credentials["ssl"],

)

# Create a cursor object

cursor = connection.cursor()

# Execute the query

cursor.execute(query)

# Fetch one result

result = cursor.fetchone()

# Close the cursor and connection

cursor.close()

connection.close()

# Return the result

return str(result[0])

View script on Windmill Hub.

And so on for MS SQL, BigQuery and Snowflake.

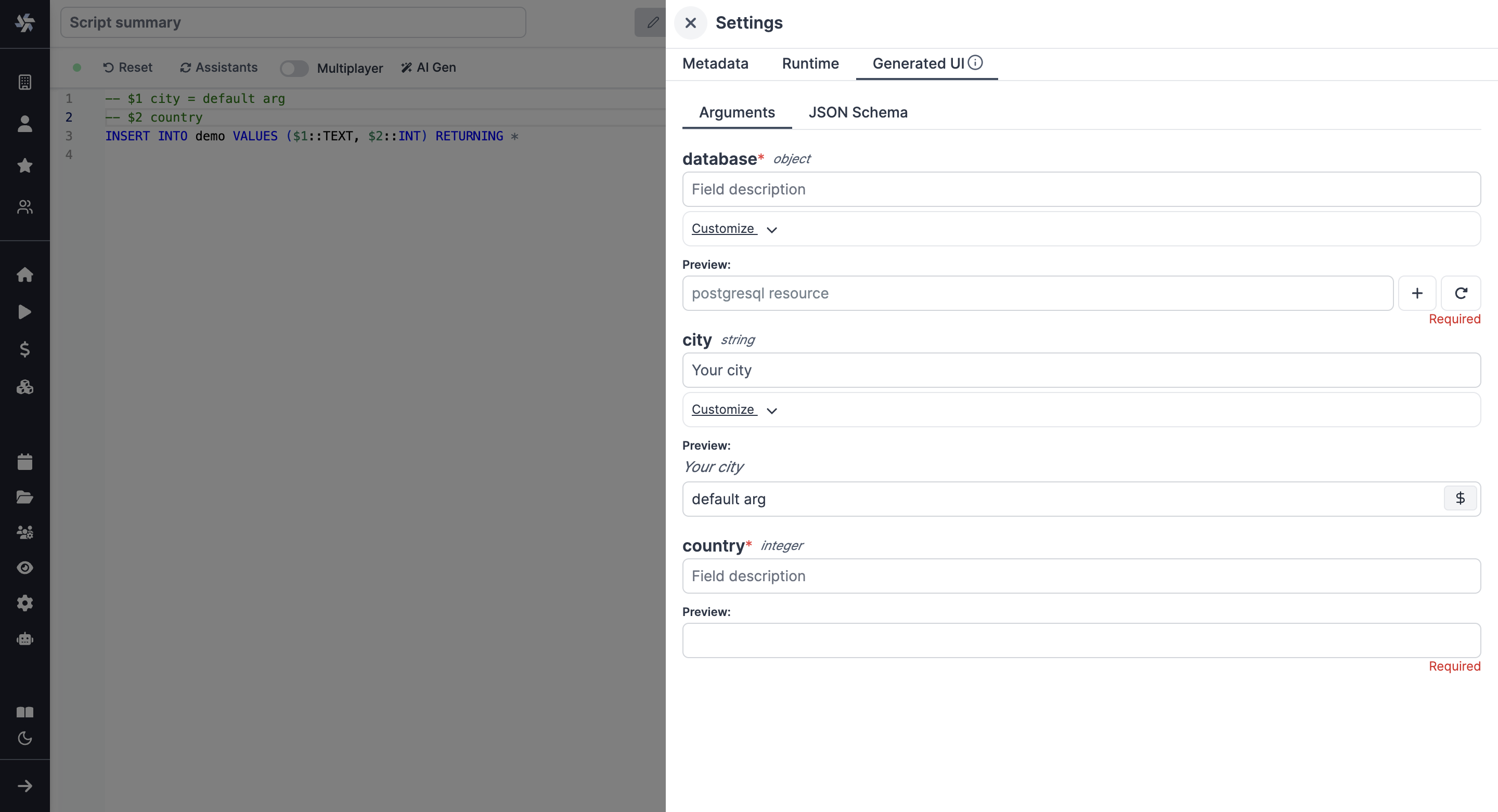

Customize your script

After you're done, click on "Deploy", which will save it to your workspace. You can now use this Script in your Flows and Apps, or as a standalone script.

Feel free to customize your script's metadata (path, name, description), runtime (concurrency limits, worker group, cache, dedicated workers) and generated UI.

What's next?

These scripts are minimal working examples, but there are a few more steps that can be useful in a real-world use case:

- Pass variables and secrets to a script.

- Connect to resources.

- Trigger that script in many ways.

- Compose scripts in Flows or Apps (in particular, Database studio to visualize and manage your databases in apps).

- You can share your scripts with the community on Windmill Hub. Once submitted, they will be verified by moderators before becoming available to everyone right within Windmill.

Scripts are immutable and there is a hash for each deployment of a given script. Scripts are never overwritten and referring to a script by path is referring to the latest deployed hash at that path.

For each script, a UI is autogenerated from the JSON Schema inferred from the script signature, and can be customized further as standalone or embedded into rich UIs using the App builder.

In addition to the UI, sync and async webhooks are generated for each deployment.

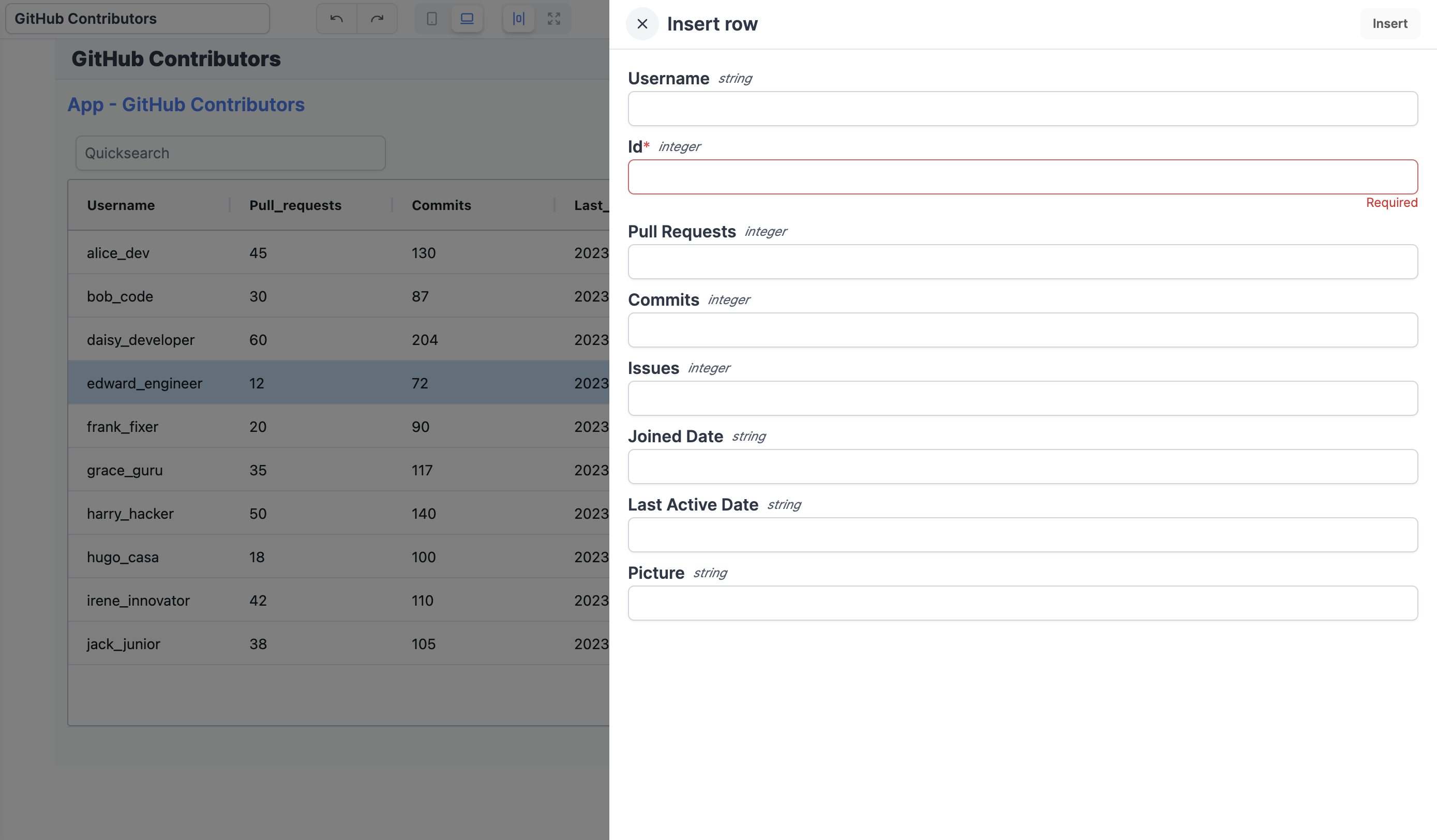

Database studio

From Windmill App editor, you can also use the Database studio component to visualize and manage your databases (PostgreSQL / MySQL / MS SQL / Snowflake / BigQuery are all supported).

The Database studio component allows you to:

- Display the content of a table.

- Edit the content of a table by directly editing the cells (only when the cell is editable).

- Add a new row.

- Delete a row.

All details at:

Streaming large query results to S3 (Enterprise feature)

Sometimes, your SQL script will return more data than the 10,000-row query limit within Windmill. In this case, you will want to use the s3 flag to stream your query result to a file.