Windmill AI

Windmill provides several ways for AI to assist you while you code.

If you are instead interested in using OpenAI from your scripts, flows and apps, see OpenAI Integration.

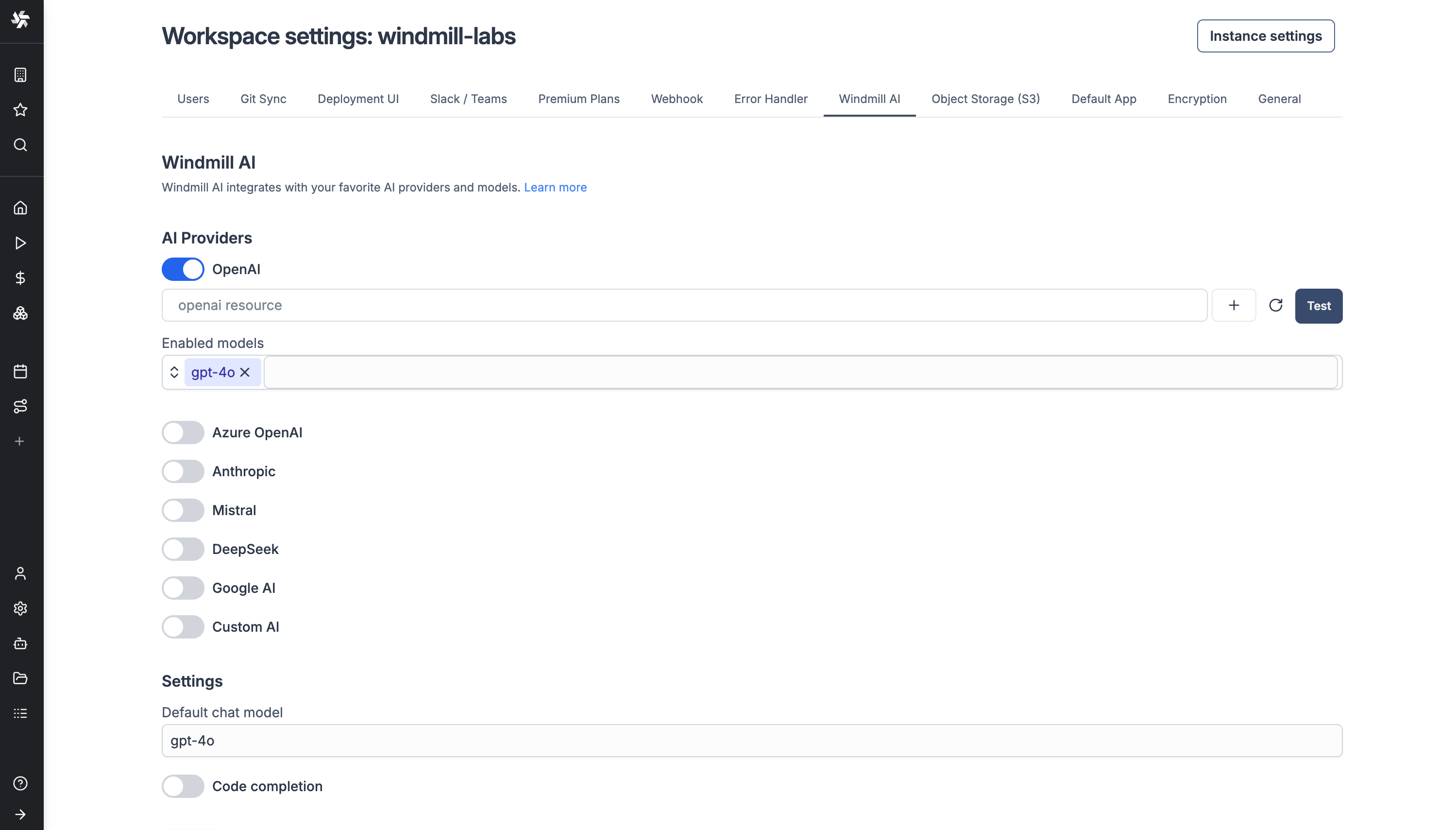

To enable Windmill AI, go to the "Windmill AI" tab in the workspace settings and add a resource for a supported model provider. Code completion is disabled by default, but you can enable it in the same tab.

Windmill AI Chat

The AI Chat in Windmill includes a navigation mode that acts as your assistant for understanding and using the platform. Beyond generating code, it helps you with Windmill itself.

In navigation mode, the AI can:

- Answer questions about Windmill: Get explanations about features, concepts, and best practices for using the platform effectively.

- Navigate the app: The AI can guide you through the Windmill interface, helping you locate specific features, settings, and tools you need.

- Provide documentation links: When you need more detailed information, the AI will direct you to relevant sections of this documentation for deeper learning.

- Fetch API information: The AI can retrieve and explain relevant API details to help you understand what data and options are available for your use case.

- Fill script and flow inputs: Speed up your workflow by letting the AI automatically populate form fields for scripts and flows based on context and your requirements.

- Custom form filling instructions: You can provide specific instructions to the AI on how to fill out forms in script and flow settings, making it adapt to your particular workflow needs.

This navigation mode makes Windmill more accessible by providing contextual help exactly when and where you need it, whether you're learning the platform or looking for quick assistance during development.

API mode

The AI chat also includes an API mode that allows you to perform basic operations on your Windmill workspace directly through conversation. You can interact with the following endpoints:

- jobs: Monitor, cancel, and retrieve information about running and completed jobs

- scripts: List, create, edit, and manage your scripts

- flows: Work with your flows and their configurations

- resources: Manage workspace resources and connections

- variables: Handle workspace and user variables

- schedules: Create and modify scheduled jobs

- workers: Check worker status and manage worker groups

These API endpoints are also available through the Model Context Protocol (MCP), so MCP-compatible tools and clients can use them for automated workflow management.
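API mode acts on the same workspace objects that Windmill exposes over its REST API. As a rough illustration only, the Python sketch below builds (but does not send) a request that lists the jobs of a workspace. The path /api/w/{workspace}/jobs/list is an assumption to check against your instance's API reference, and the instance URL, workspace id and token are hypothetical placeholders.

```python
import urllib.parse
import urllib.request


def build_list_jobs_request(base_url: str, workspace: str, token: str) -> urllib.request.Request:
    """Build (without sending) a GET request for the assumed jobs-list endpoint of a workspace."""
    # Assumed path layout: /api/w/<workspace>/jobs/list
    url = f"{base_url.rstrip('/')}/api/w/{urllib.parse.quote(workspace)}/jobs/list"
    # Authenticate with a bearer token (a user or workspace token).
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"}, method="GET")


# Hypothetical instance URL, workspace id and token, for illustration only.
req = build_list_jobs_request("https://windmill.example.com", "demo", "MY_TOKEN")
print(req.full_url)  # https://windmill.example.com/api/w/demo/jobs/list
# Sending it with urllib.request.urlopen(req) should return the job list as JSON,
# provided the endpoint is as assumed above.
```

In the chat, you would simply ask something like "show me the last failed jobs" and let the assistant issue the equivalent call.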

Windmill AI for scripts

AI Chat

The script editor includes an integrated AI chat panel designed to assist with coding tasks directly. The assistant can generate code, identify and fix issues, suggest improvements, add documentation, and more.

You can open the AI chat panel with Cmd+L (Mac) or Ctrl+L (Windows/Linux). If code is selected, it is automatically included in your message to the AI.

Key features:

- When the AI suggests code changes, you can apply or discard specific parts of the suggestion, rather than having to accept or reject the entire block.

- You can provide additional context to guide the AI's suggestions, such as database schemas, deployed script diffs, and runtime errors. Open the context menu by clicking the context button or typing @ directly in the chat input.

- The panel includes built-in shortcuts for common tasks like fixing bugs or optimizing code.

Code completion

The script editor includes code autocomplete that provides real-time suggestions as you type. Currently, this feature requires Mistral Codestral, which is well suited to code completion thanks to its dedicated FIM (fill-in-the-middle) endpoint.
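Fill-in-the-middle means the model sees the code both before and after the cursor and generates what belongs in between. The Python sketch below shows that idea by splitting an editor buffer at the cursor into a prefix and a suffix. The field names prompt and suffix follow Mistral's FIM request format as we understand it, and the model name codestral-latest and the max_tokens value are assumptions; this is not how Windmill itself builds its requests.

```python
import json


def build_fim_payload(source: str, cursor: int, model: str = "codestral-latest") -> dict:
    """Split the editor buffer at the cursor into the prefix/suffix pair a FIM model fills between."""
    if not 0 <= cursor <= len(source):
        raise ValueError("cursor must be within the source text")
    return {
        "model": model,             # assumed model name
        "prompt": source[:cursor],  # code before the cursor
        "suffix": source[cursor:],  # code after the cursor
        "max_tokens": 64,           # keep inline suggestions short
    }


code = "def add(a: int, b: int) -> int:\n    \n\nprint(add(1, 2))\n"
cursor = code.index("    \n") + 4  # cursor sits on the empty, indented body line
payload = build_fim_payload(code, cursor)
print(json.dumps(payload, indent=2))
```

Because the suffix is sent too, the model can see that add is later called with two integers and complete the body accordingly, which a prefix-only completion would have to guess.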

The autocomplete feature can be enabled globally in the workspace settings. Individual users can then toggle it on or off in their personal script editor settings.

Summary copilot

From your code, the AI assistant can generate a script summary.

Legacy AI features

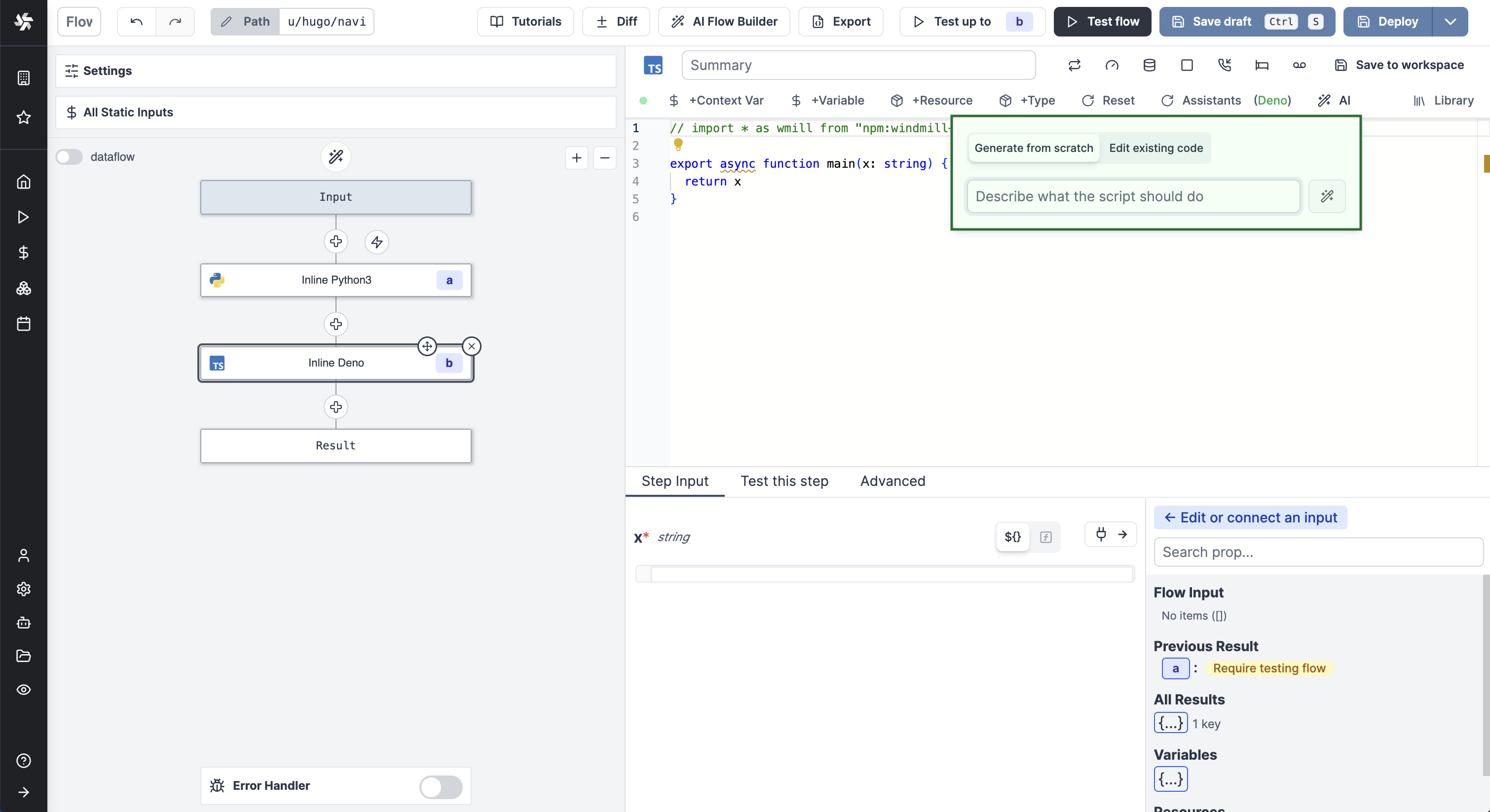

The following guides are still applicable for code editing of inline scripts inside flows and apps.

Code generation

In a code editor (Script, Flow, Apps), click AI and write a prompt describing what the script should do. The generated script follows Windmill's main requirements and conventions (exposing a main function, importing libraries, using resource types, declaring required parameters with types). When creating a SQL or GraphQL script, the AI assistant also takes the selected database or GraphQL API schema into account.

The AI assistant is particularly effective when generating Python and TypeScript (Bun runtime) scripts, so we recommend using these languages when possible.

You will get better results if your prompt specifies what the script should take as parameters, what it should return, and which integrations and libraries it should use, if any.

For instance, in the demo video above we wanted to generate a script that fetches the commits of a GitHub repository passed as a parameter, so we wrote: "fetch and return the commits of a given repository on GitHub using octokit".
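The prompt above asks for octokit, a JavaScript client. For illustration only, here is a hand-written Python analogue of the kind of script the assistant aims for: a main function whose typed parameters become the script's inputs, calling GitHub's REST endpoint GET /repos/{owner}/{repo}/commits with the standard library. Splitting the repository into owner and repo, and trimming each commit to a short SHA and a message title, are our own choices; the assistant will not necessarily generate this.

```python
import json
import urllib.parse
import urllib.request

GITHUB_API = "https://api.github.com"


def commits_url(owner: str, repo: str) -> str:
    """Build the GitHub REST URL that lists the commits of owner/repo."""
    return f"{GITHUB_API}/repos/{urllib.parse.quote(owner)}/{urllib.parse.quote(repo)}/commits"


def main(owner: str, repo: str) -> list:
    """Entry point: the typed parameters owner and repo become the script's inputs."""
    req = urllib.request.Request(
        commits_url(owner, repo),
        headers={"Accept": "application/vnd.github+json"},
    )
    with urllib.request.urlopen(req) as resp:
        commits = json.load(resp)
    # Return a compact summary: short SHA and first line of each commit message.
    return [
        {"sha": c["sha"][:7], "message": (c["commit"]["message"].splitlines() or [""])[0]}
        for c in commits
    ]
```

Unauthenticated GitHub requests are heavily rate-limited; a production script would typically take a GitHub resource as a parameter and send its token.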

Code editing

Inside the AI Gen popup, you can choose to edit the existing code rather than generate new code. The assistant will modify the code according to your prompt.

Code fixing

When running your code fails, you will be offered an "AI Fix" option. The assistant automatically reads the code, explains what went wrong, and suggests a fix.

Windmill AI for flows

AI Flow Chat

The flow editor includes an integrated AI chat panel that lets you create and modify flows using natural language. Just describe what you want to achieve, and the AI will build the flow for you, step by step.

It can add scripts, loops, and branches, searching your workspace and Windmill Hub for relevant scripts or generating new ones when needed or on request. It automatically sets up flow inputs and connects steps by filling in parameters based on context. For loops and branches, the AI sets suitable iterator expressions and predicates based on your flow's data.

Script mode in AI Flow Chat

If you're working on an inline script in your flow, use the toggle to switch to script mode. It gives you the same AI assistance as the script editor, including the ability to add context such as database schemas, diffs, and preview errors, along with granular apply/reject controls and quick actions.

Summary copilot for steps

From your code, the AI assistant can generate a summary for flow steps.

Step input copilot

When adding a new step to a flow, the AI assistant will suggest inputs based on the previous steps' results and flow inputs.

Flow loops iterator expressions from context

When adding a for loop, the AI assistant will suggest iterator expressions based on the previous steps' results.

Flow branches predicate expressions from prompts

When adding a branch, the AI assistant will suggest predicate expressions from a prompt.

AI form filling

The AI assistant can fill script and flow run forms based on your requirements. To enable it, go to the script or flow settings and enable "AI Form Filling". You will also be able to provide specific instructions to the AI on how to fill out the run form, making it adapt to your particular workflow needs.

CRON schedules from prompt

The AI assistant can generate CRON schedules from a prompt.
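A CRON expression packs a recurring schedule into space-separated fields; for example, a prompt like "every weekday at 9am" corresponds to the standard 5-field expression 0 9 * * 1-5 (minute, hour, day of month, month, day of week). The Python sketch below checks whether a given time matches such an expression, which can help you sanity-check what the assistant produced. It implements classic 5-field cron only; Windmill's scheduler may expect a different layout (for example, one with an extra seconds field), so treat this as an explanation of cron syntax rather than of Windmill's parser.

```python
from datetime import datetime

# Bounds for standard 5-field cron: minute, hour, day of month, month, day of week (0 = Sunday).
BOUNDS = [(0, 59), (0, 23), (1, 31), (1, 12), (0, 6)]


def expand(field: str, lo: int, hi: int) -> set:
    """Expand one cron field (e.g. '*/15', '1-5', '0,30') into the set of values it allows."""
    values = set()
    for part in field.split(","):
        base, _, step_text = part.partition("/")
        step = int(step_text) if step_text else 1
        if base == "*":
            start, end = lo, hi
        elif "-" in base:
            start, end = (int(x) for x in base.split("-"))
        else:
            start = int(base)
            # '5/15' is treated as '5-hi/15', a common extension.
            end = hi if step_text else start
        if start < lo or end > hi or start > end or step < 1:
            raise ValueError(f"invalid cron field: {field!r}")
        values.update(range(start, end + 1, step))
    return values


def matches(expr: str, when: datetime) -> bool:
    """Return True if `when` (to the minute) satisfies the 5-field cron expression."""
    fields = expr.split()
    if len(fields) != 5:
        raise ValueError("expected 5 fields: minute hour day-of-month month day-of-week")
    minute, hour, dom, month, dow = (expand(f, lo, hi) for f, (lo, hi) in zip(fields, BOUNDS))
    cron_dow = (when.weekday() + 1) % 7  # Python: Monday = 0; cron: Sunday = 0
    dom_ok, dow_ok = when.day in dom, cron_dow in dow
    # Classic cron rule: if both day fields are restricted (not starting with '*'), either may match.
    if not fields[2].startswith("*") and not fields[4].startswith("*"):
        day_ok = dom_ok or dow_ok
    else:
        day_ok = dom_ok and dow_ok
    return when.minute in minute and when.hour in hour and when.month in month and day_ok


print(matches("0 9 * * 1-5", datetime(2024, 1, 1, 9, 0)))  # Monday 09:00 -> True
```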

Models

Windmill AI supports:

- OpenAI's models (o1 currently not supported)

- Azure OpenAI's models (base URL format: https://{your-resource-name}.openai.azure.com/openai)

- Anthropic's models (including Claude 3.7 Sonnet in extended thinking mode)

- Mistral's Codestral

- DeepSeek's models

- Google's Gemini models

- Groq's models

- OpenRouter's models

- Together AI's models

- AWS Bedrock's models (required configuration: AWS region and long-term API key)

- Custom AI: base URL and API key of any OpenAI API-compatible provider. For Ollama and other local models, select "Custom AI" and provide your server URL (e.g., http://localhost:11434/v1 for a local Ollama instance, or your custom endpoint).

If you'd like to use a model that isn't in the default dropdown list, you can use any model the provider supports (such as gpt-4o-2024-05-13 or claude-3-7-sonnet-20250219) by typing the model name in the model input field and pressing Enter.
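"OpenAI API-compatible" means the provider accepts the same requests as OpenAI's API under the base URL you enter, for example a POST to {base URL}/chat/completions with a model name and a list of messages. The Python sketch below builds (without sending) such a request for a local Ollama server. We assume Windmill appends the usual OpenAI paths to the Custom AI base URL; the model name llama3.2 and the prompt are hypothetical examples.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (without sending) a POST to an OpenAI-compatible /chat/completions endpoint."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    headers = {"Content-Type": "application/json"}
    if api_key:  # local Ollama does not require a key; hosted providers usually do
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Local Ollama serves its OpenAI-compatible API under /v1; the model name is an example.
req = build_chat_request("http://localhost:11434/v1", "llama3.2", "Summarize this script.")
print(req.full_url)  # http://localhost:11434/v1/chat/completions
```

If a request like this works against your endpoint, that endpoint is a reasonable candidate for the Custom AI option.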